Configure Front-end Private Service Connect

This page covers configuration steps for inbound private connectivity between users and their Databricks workspaces. To enhance the security of your workspace's serverless implementation, you must use inbound private connectivity.

- For an overview of private connectivity at Databricks, see Classic compute plane networking.

- To enable classic compute plane private connectivity to Databricks, see Enable Private Service Connect for your workspace.

Enable inbound Private Service Connect for your workspace

Establish secure, private connections from your Google Cloud VPCs or on-premises networks to Databricks services using inbound Private Service Connect, which routes traffic through a Private Service Connect endpoint in your VPC instead of over the public internet.

With inbound Private Service Connect, you can:

- Configure private access: Inbound Private Service Connect supports connections to the Databricks web application, REST API, and Databricks Connect API.

- Enable private access: Configure inbound Private Service Connect during the creation of a new workspace or enable it on an existing one.

- Enforce mandatory private connectivity: To require private connections to a workspace, configure inbound private connectivity from users to Databricks and disable public access in the workspace's private access settings object.

To use the REST API, see the Private Access Settings API reference.

Requirements and limitations

To enable inbound Private Service Connect, you must meet the following requirements:

- Your Databricks account must be on the Enterprise plan.

- New workspaces only: You must add Private Service Connect connectivity when you create the workspace. You can't add Private Service Connect connectivity to an existing workspace.

- Customer-managed VPC is required: You must use a customer-managed VPC. You must create your VPC in the Google Cloud console or another tool. When the VPC is available, use the Databricks account console or API to create a network configuration. This configuration must reference your new VPC and include specific settings for Private Service Connect.

- Quotas: You can configure up to ten Private Service Connect endpoints per region per VPC host project for Databricks. Multiple Databricks workspaces in the same VPC and region must share these endpoints because Private Service Connect endpoints are region-specific resources. If this quota presents a limitation for your setup, contact your account team.

- No cross-region connectivity: All Private Service Connect workspace components must be in the same region, including:

  - Transit VPC network and subnets.
  - Compute plane VPC network and subnets.
  - Databricks workspace.
  - Private Service Connect endpoints.
  - Private Service Connect endpoint subnets.
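Because a single component in the wrong region breaks the setup, it can help to check the plan mechanically before creating anything. The following is a minimal Python sketch, assuming you record each component's region in a dictionary; the component keys are hypothetical labels, not Databricks or Google Cloud identifiers.

```python
from typing import Dict, List

# Hypothetical labels for the components listed above; rename to match your plan.
REQUIRED_COMPONENTS = (
    "transit_vpc_subnets",
    "compute_plane_subnets",
    "workspace",
    "psc_endpoints",
    "psc_endpoint_subnets",
)


def region_problems(regions: Dict[str, str]) -> List[str]:
    """List components that are missing or not in the workspace's region."""
    problems = [f"missing: {name}" for name in REQUIRED_COMPONENTS if name not in regions]
    target = regions.get("workspace")
    for name in REQUIRED_COMPONENTS:
        region = regions.get(name)
        # Only compare components whose region is known.
        if region is not None and target is not None and region != target:
            problems.append(f"{name} is in {region}, workspace is in {target}")
    return problems


if __name__ == "__main__":
    plan = {
        "transit_vpc_subnets": "us-east4",
        "compute_plane_subnets": "us-east4",
        "workspace": "us-east4",
        "psc_endpoints": "us-east4",
        "psc_endpoint_subnets": "us-central1",  # deliberately wrong
    }
    for problem in region_problems(plan):
        print(problem)
```

An empty result means every listed component shares the workspace's region.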

Multiple options for network topology

When you deploy a private Databricks workspace, you must choose one of the following network configuration options:

- Host Databricks users (clients) and the Databricks compute plane on the same network: In this option, the transit VPC and compute plane VPC refer to the same underlying VPC network. If you choose this topology, all access to any Databricks workspace from that VPC must go over the inbound Private Service Connect connection for that VPC. See Requirements and limitations.

- Host Databricks users (clients) and the Databricks compute plane on separate networks: In this option, the user or application client can access different Databricks workspaces using different network paths. You can optionally allow a user on the transit VPC to access a private workspace over a Private Service Connect connection while also allowing users on the public internet to access the workspace.

You cannot mix workspaces that use inbound Private Service Connect with those that do not within the same transit VPC. Although you can share one transit VPC across multiple workspaces, all workspaces in the transit VPC must be of the same type, either all using inbound Private Service Connect, or none using it. This requirement is due to the specifics of DNS resolution on Google Cloud.

Regional service attachments reference

To enable inbound Private Service Connect, you need the service attachment URI of the workspace endpoint for your region. The URI ends with the suffix plproxy-psc-endpoint-all-ports. This endpoint has a dual role: inbound Private Service Connect uses it to connect your transit VPC to the workspace web application and REST APIs, and classic compute plane Private Service Connect uses it to connect to the control plane for REST APIs.

To find the workspace endpoint and service attachment URI for your region, see IP addresses and domains for Databricks services and assets.

Step 1: Create a subnet

In the compute plane VPC network, create a subnet specifically for Private Service Connect endpoints. The following instructions assume that you're using the Google Cloud console, but you can also use the gcloud CLI to perform similar tasks.

To create a subnet:

-

In the Google Cloud cloud console, go to the VPC list page.

-

Click Add subnet.

-

Set the name, description, and region.

-

If the Purpose field is visible (it might not be visible), choose None:

-

Set a private IP range for the subnet, such as

10.0.0.0/24. You must allocate enough IP spaces to host your Private Service Connect endpoints. Your IP ranges cannot overlap for any of the following:- Subnet of BYO VPC.

- Subnet that holds the Private Service Connect endpoints.

-

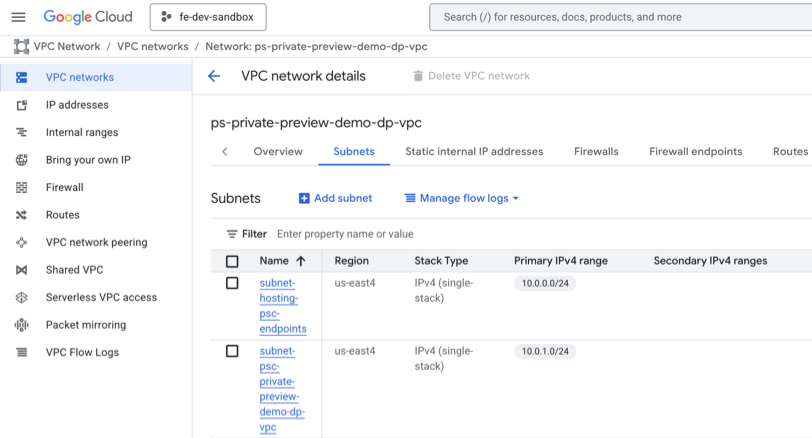

Confirm that your subnet was added to the VPC view in the Google Cloud console for your VPC:
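Before you commit to a range, you can check it with Python's standard ipaddress module. This sketch flags overlapping ranges and estimates usable addresses. It assumes Google Cloud's reservation of four addresses in every primary IPv4 subnet range. The range names are hypothetical.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple

# Google Cloud reserves four addresses in every primary IPv4 subnet range.
RESERVED_PER_SUBNET = 4


def usable_addresses(cidr: str) -> int:
    """Addresses left for endpoints after Google Cloud's reserved addresses."""
    return max(0, ipaddress.ip_network(cidr).num_addresses - RESERVED_PER_SUBNET)


def overlapping_ranges(ranges: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return every pair of named CIDR ranges that overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(networks), 2)
        if networks[a].overlaps(networks[b])
    ]


if __name__ == "__main__":
    planned = {
        "psc_endpoint_subnet": "10.0.0.0/24",  # the example range above
        "byo_vpc_subnet": "10.0.0.0/16",       # hypothetical existing subnet
    }
    print(usable_addresses(planned["psc_endpoint_subnet"]))  # 252
    print(overlapping_ranges(planned))  # [('byo_vpc_subnet', 'psc_endpoint_subnet')]
```

A non-empty overlap list means you need to choose a different range before you create the subnet.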

Step 2: Configure inbound private access

To configure private access from Databricks clients for inbound Private Service Connect:

1. Create a transit VPC network or reuse an existing one.

2. Create or reuse a subnet with a private IP range that has access to the inbound Private Service Connect endpoint.

   Important: Verify that your users have access to VMs or compute resources on that subnet.

3. Create a VPC endpoint from the transit VPC to the workspace (plproxy-psc-endpoint-all-ports) service attachment. To get the full name to use for your region, see IP addresses and domains for Databricks services and assets.
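With the gcloud CLI, you typically create a Private Service Connect endpoint to a published service in two steps: reserve an internal IP address in the subnet, then create a forwarding rule that targets the service attachment. This Python sketch only builds the gcloud argument lists. The project, network, subnet, and address names are placeholders. The service attachment URI must be the one listed for your region.

```python
import shlex
from typing import List

# Suffix of the Databricks workspace service attachment named in this section.
WORKSPACE_ATTACHMENT_SUFFIX = "plproxy-psc-endpoint-all-ports"


def psc_endpoint_commands(
    project: str,
    region: str,
    network: str,
    subnet: str,
    endpoint_name: str,
    service_attachment_uri: str,
) -> List[List[str]]:
    """Build gcloud commands that reserve an internal IP and create the PSC endpoint."""
    if not service_attachment_uri.endswith(WORKSPACE_ATTACHMENT_SUFFIX):
        raise ValueError("expected the workspace (plproxy-psc-endpoint-all-ports) attachment")
    address_name = f"{endpoint_name}-ip"  # hypothetical naming convention
    reserve_ip = [
        "gcloud", "compute", "addresses", "create", address_name,
        f"--project={project}", f"--region={region}", f"--subnet={subnet}",
    ]
    create_endpoint = [
        "gcloud", "compute", "forwarding-rules", "create", endpoint_name,
        f"--project={project}", f"--region={region}", f"--network={network}",
        f"--address={address_name}",
        f"--target-service-attachment={service_attachment_uri}",
    ]
    return [reserve_ip, create_endpoint]


if __name__ == "__main__":
    for cmd in psc_endpoint_commands(
        project="my-transit-project",   # placeholder
        region="us-east4",
        network="transit-vpc",          # placeholder
        subnet="transit-psc-subnet",    # placeholder
        endpoint_name="psc-demo-user-cp",
        # Placeholder URI: copy the real one for your region from the Databricks docs.
        service_attachment_uri="projects/PRODUCER/regions/us-east4/serviceAttachments/"
        + WORKSPACE_ATTACHMENT_SUFFIX,
    ):
        print(shlex.join(cmd))
```

Printing the commands before you run them lets you review the names and region first.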

Step 3: Register your VPC endpoints

Register your Google Cloud endpoints using the Databricks account console. You can also use the VPC Endpoint Configurations API.

1. Go to the Databricks account console.

2. Click the Cloud resources tab, then VPC endpoints.

3. Click Register VPC endpoint.

4. For each of your Private Service Connect endpoints, fill in the required fields to register a new VPC endpoint:

   - VPC endpoint name: A human-readable name to identify the VPC endpoint. Databricks recommends using the same name as your Private Service Connect endpoint ID, but it is not required that these match.
   - Region: The Google Cloud region where this Private Service Connect endpoint is defined.
   - Google Cloud VPC network project ID: The Google Cloud project ID where this endpoint is defined. This is the project ID of the VPC where user connections originate, which is sometimes referred to as a transit VPC.

The following table shows the information you need for the endpoint.

| Endpoint type | Field |
|---|---|
| Inbound transit VPC endpoint (plproxy-psc-endpoint-all-ports) | VPC endpoint name (Databricks recommends matching the Google Cloud endpoint ID) |
| | Google Cloud VPC network project ID |
| | Google Cloud region |

When you are done, use the VPC endpoints list in the account console to review the list of endpoints and confirm the information.
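To register the endpoint through the VPC Endpoint Configurations API instead of the console, you can build the request as shown below. Treat this as a sketch. The URL path and field names (vpc_endpoint_name, gcp_vpc_endpoint_info, project_id, psc_endpoint_name, endpoint_region) reflect our reading of the API reference, so verify them there. Obtaining the bearer token is out of scope.

```python
import json
import urllib.request

ACCOUNTS_HOST = "https://accounts.gcp.databricks.com"


def register_vpc_endpoint_request(
    account_id: str,
    token: str,
    name: str,
    project_id: str,
    psc_endpoint_name: str,
    region: str,
) -> urllib.request.Request:
    """Build (but do not send) a POST request that registers one PSC endpoint."""
    body = {
        "vpc_endpoint_name": name,  # human-readable name in the account console
        "gcp_vpc_endpoint_info": {
            "project_id": project_id,  # project of the transit VPC
            "psc_endpoint_name": psc_endpoint_name,  # Google Cloud endpoint name
            "endpoint_region": region,
        },
    }
    return urllib.request.Request(
        f"{ACCOUNTS_HOST}/api/2.0/accounts/{account_id}/vpc-endpoints",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

To send the request, pass it to urllib.request.urlopen. The response should contain the Databricks VPC endpoint ID that later steps refer to.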

Step 4: Create a Databricks private access settings object

Create a private access settings object that defines several Private Service Connect settings for your workspace. This object is attached to your workspace. You can attach one private access settings object to multiple workspaces.

1. As an account admin, go to the account console.

2. In the sidebar, click Security.

3. Click Private Access Settings.

4. Click Add private access setting.

5. Enter a name for your new private access settings object.

6. Select the same region as your workspace.

7. Set the Public access enabled option. This cannot be changed after the private access settings object is created.

   - If public access is enabled, users can configure the IP access lists to allow or block public access (from the public internet) to the workspaces that use this private access settings object.
   - If public access is disabled, no public traffic can access the workspaces that use this private access settings object. The IP access lists do not affect public access.

   In both cases, IP access lists cannot block private traffic from Private Service Connect because the access lists only control access from the public internet.

8. Select a Private Access Level that restricts access to authorized Private Service Connect connections:

   - Account: Any VPC endpoints registered with your Databricks account can access this workspace. This is the default value.
   - Endpoint: Only the VPC endpoints that you specify can access the workspace. If you choose this value, choose from among your registered VPC endpoints.

9. Click Add private access setting.
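If you use the Private Access Settings API instead of the console, the request body mirrors the console fields. This sketch assumes the field names private_access_settings_name, region, public_access_enabled, private_access_level (ACCOUNT or ENDPOINT), and allowed_vpc_endpoint_ids. Check these names against the API reference before you rely on them.

```python
from typing import List, Optional

VALID_LEVELS = {"ACCOUNT", "ENDPOINT"}


def private_access_settings_body(
    name: str,
    region: str,
    public_access_enabled: bool,
    level: str = "ACCOUNT",
    allowed_vpc_endpoint_ids: Optional[List[str]] = None,
) -> dict:
    """Build a private access settings request body; validation mirrors the console rules."""
    if level not in VALID_LEVELS:
        raise ValueError(f"unknown private access level: {level}")
    body = {
        "private_access_settings_name": name,
        "region": region,  # must match the workspace region
        # Per this page, this flag cannot be changed after creation.
        "public_access_enabled": public_access_enabled,
        "private_access_level": level,
    }
    if level == "ENDPOINT":
        # Endpoint level only makes sense with explicitly chosen registered endpoints.
        if not allowed_vpc_endpoint_ids:
            raise ValueError("ENDPOINT level needs at least one registered VPC endpoint ID")
        body["allowed_vpc_endpoint_ids"] = list(allowed_vpc_endpoint_ids)
    return body
```

Because the public access flag cannot be changed later, it is worth confirming its value in the generated body before you submit it.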

Step 5: Create a network configuration

Create a Databricks network configuration that contains information about your customer-managed VPC for your workspace. This object is attached to your workspace. You can also use the Network configurations API.

1. Go to the Databricks account console.

2. Click the Cloud resources tab, then Network configurations.

3. Click Add Network configuration.

4. Enter the following information for the network configuration:

   - Network configuration name
   - Network GCP project ID
   - VPC Name
   - Subnet Name
   - Region of the subnet
   - VPC endpoint for secure cluster connectivity relay
   - VPC endpoint for REST APIs (classic compute plane Private Service Connect connection to workspace)
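Through the Network configurations API, the same fields fit into a single JSON body. This is a sketch under assumptions. The nesting (gcp_network_info, plus vpc_endpoints with dataplane_relay and rest_api lists) and the field names reflect our reading of the API reference, and the endpoint IDs are the Databricks IDs returned when you registered the endpoints.

```python
import json


def network_config_body(
    name: str,
    network_project_id: str,
    vpc_name: str,
    subnet_name: str,
    subnet_region: str,
    relay_endpoint_id: str,
    rest_api_endpoint_id: str,
) -> dict:
    """Map the console fields above onto an (assumed) API request body."""
    return {
        "network_name": name,
        "gcp_network_info": {
            "network_project_id": network_project_id,
            "vpc_id": vpc_name,
            "subnet_id": subnet_name,
            "subnet_region": subnet_region,
        },
        "vpc_endpoints": {
            # "VPC endpoint for secure cluster connectivity relay"
            "dataplane_relay": [relay_endpoint_id],
            # "VPC endpoint for REST APIs"
            "rest_api": [rest_api_endpoint_id],
        },
    }


if __name__ == "__main__":
    # All values are placeholders.
    print(json.dumps(network_config_body(
        "psc-network", "my-vpc-project", "my-vpc", "my-subnet", "us-east4",
        "<relay-vpc-endpoint-id>", "<rest-api-vpc-endpoint-id>",
    ), indent=2))
```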

Step 6: Create a workspace

Create a workspace that uses the network configuration that you created using the account console. You can also use the Workspaces API.

-

Go to the Databricks account console.

-

Click the Workspaces tab.

-

Click Create workspace.

-

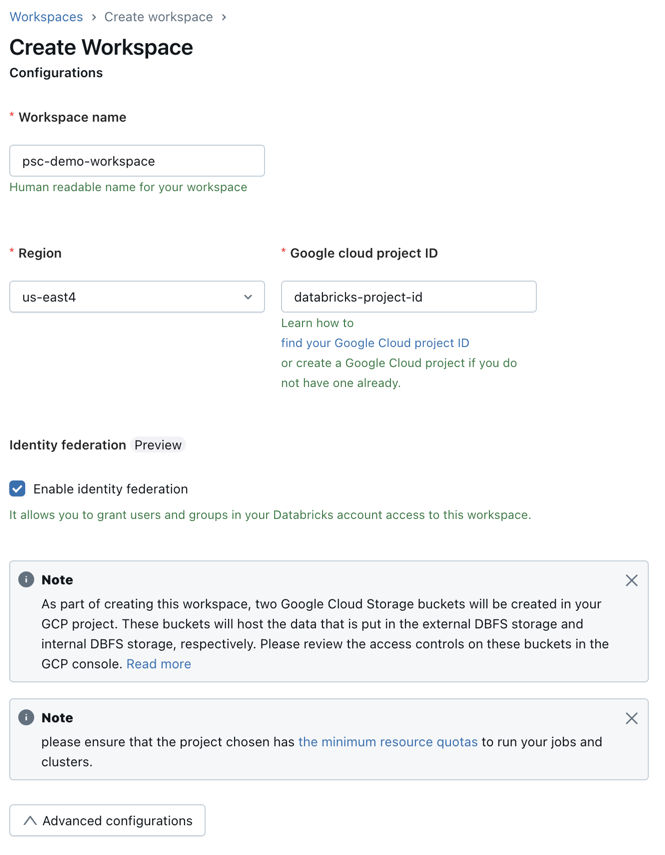

Set these standard workspace fields:

- Workspace name.

- Workspace region.

- Google Cloud project ID (the project for the workspace's compute resources, which might be different than the project ID for your VPC).

-

Set Private Service Connect specific fields:

- Click Advanced configurations.

- In the Network configuration field, choose the network configuration that you created in previous steps.

- In the Private connectivity field, choose the private access settings object that you created in the previous steps. You can attach one private access settings object to multiple workspaces.

-

Click Save.
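When you use the Workspaces API, the request links the workspace to the objects from the earlier steps by their IDs. This sketch assumes the field names workspace_name, location, cloud_resource_container.gcp.project_id, network_id, and private_access_settings_id. Verify them against the API reference.

```python
import json


def workspace_body(
    name: str,
    region: str,
    compute_project_id: str,
    network_id: str,
    private_access_settings_id: str,
) -> dict:
    """Build an (assumed) create-workspace body that attaches the PSC objects."""
    return {
        "workspace_name": name,
        "location": region,
        # Project for the workspace's compute resources, which can differ from the VPC project.
        "cloud_resource_container": {"gcp": {"project_id": compute_project_id}},
        "network_id": network_id,  # from the network configuration step
        "private_access_settings_id": private_access_settings_id,  # from the private access settings step
    }


if __name__ == "__main__":
    # All IDs are placeholders.
    print(json.dumps(workspace_body(
        "psc-demo", "us-east4", "my-compute-project",
        "<network-id>", "<private-access-settings-id>",
    ), indent=2))
```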

Step 7: Validate the workspace configuration

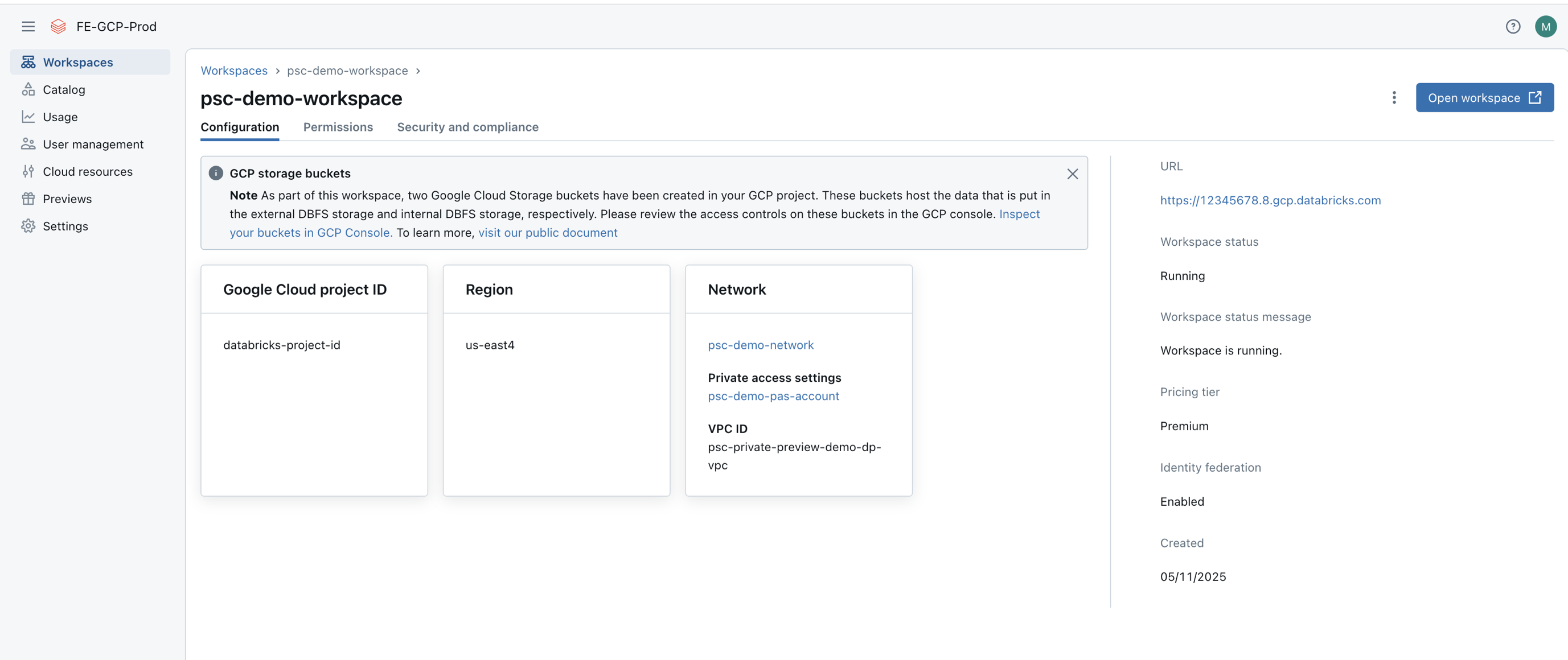

After you create the workspace, return to the workspace page and find your newly created workspace. It typically takes between 30 seconds and 3 minutes for the workspace to transit from PROVISIONING status to RUNNING status. After the status changes to RUNNING, your workspace is configured successfully.

Validate the configuration using the Databricks account console:

-

Click Cloud resources and then Network configurations. Find the network configuration for your VPC and confirm that all fields are correct.

-

Click Workspaces and find the workspace. Confirm that the workspace is running:

If you want to review the set of workspaces using the API, make a GET request to the https://accounts.gcp.databricks.com/api/2.0/accounts/<account-id>/workspaces endpoint. See Get all workspaces.
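The GET request above can be scripted to report workspaces that have not yet reached RUNNING. This is a sketch. It assumes the response is a JSON array of workspace objects with workspace_name and workspace_status fields. The bearer token is a placeholder you obtain separately.

```python
import json
import urllib.request
from typing import List

ACCOUNTS_HOST = "https://accounts.gcp.databricks.com"


def list_workspaces_request(account_id: str, token: str) -> urllib.request.Request:
    """Build (but do not send) the GET request described above."""
    return urllib.request.Request(
        f"{ACCOUNTS_HOST}/api/2.0/accounts/{account_id}/workspaces",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def workspaces_not_running(raw_body: bytes) -> List[str]:
    """Return names of workspaces whose status is anything other than RUNNING."""
    workspaces = json.loads(raw_body)
    return [
        ws.get("workspace_name", "<unnamed>")
        for ws in workspaces
        if ws.get("workspace_status") != "RUNNING"
    ]


# Usage (requires network access and a valid token):
# with urllib.request.urlopen(list_workspaces_request(ACCOUNT_ID, TOKEN)) as resp:
#     print(workspaces_not_running(resp.read()))
```

Given the typical 30-second to 3-minute provisioning time, re-run the check a few times before you treat a PROVISIONING status as a problem.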

Step 8: Configure DNS

Create a private DNS zone for inbound connectivity. Although you can share one transit VPC across multiple workspaces in the same region, it must exclusively contain either workspaces using inbound PSC or those that do not, because Google Cloud's DNS resolution does not support mixing both types in a single transit VPC.

1. Verify that you have the workspace URL for your deployed Databricks workspace, in the format https://33333333333333.3.gcp.databricks.com. Get this URL from the web browser when you are viewing a workspace or from the account console in its list of workspaces.

2. Create a private DNS zone that includes the transit VPC network. On the Cloud DNS page in the Google Cloud console, click CREATE ZONE.

   - In the DNS name field, enter gcp.databricks.com.
   - In the Networks field, choose your transit VPC network.
   - Click CREATE.

3. Create DNS A records to map your workspace URL to the plproxy-psc-endpoint-all-ports Private Service Connect endpoint IP.

   - Locate the Private Service Connect endpoint IP for the plproxy-psc-endpoint-all-ports Private Service Connect endpoint. In this example, suppose the IP for the Private Service Connect endpoint psc-demo-user-cp is 10.0.0.2.
   - Create an A record to map the workspace URL to the Private Service Connect endpoint IP. In this example, map your unique workspace domain name (such as 33333333333333.3.gcp.databricks.com) to the IP address of the Private Service Connect endpoint, which in the previous example was 10.0.0.2. Your IP address might be different.
   - Create an A record to map dp-<workspace-url> to the Private Service Connect endpoint IP. With the example workspace URL, this maps dp-33333333333333.3.gcp.databricks.com to 10.0.0.2, but your values might be different.

4. If users access the workspace from a web browser in the user VPC, you must create an A record that maps <workspace-gcp-region>.psc-auth.gcp.databricks.com to 10.0.0.2 to support authentication. In this example, map us-east4.psc-auth.gcp.databricks.com to 10.0.0.2. This step is typically required for inbound connectivity. However, if you plan to use inbound connectivity from the transit network only for REST APIs (not web browser access), you can omit it.

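In this example, your zone's inbound DNS configuration contains A records that map your workspace URL and the Databricks authentication service to the endpoint IP:

| DNS name | Type | IP address |
|---|---|---|
| 33333333333333.3.gcp.databricks.com | A | 10.0.0.2 |
| dp-33333333333333.3.gcp.databricks.com | A | 10.0.0.2 |
| us-east4.psc-auth.gcp.databricks.com | A | 10.0.0.2 |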
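If you prefer the gcloud CLI to the Cloud DNS page, the zone and records can be created with gcloud dns managed-zones create and gcloud dns record-sets create. This Python sketch only builds those argument lists. The zone name, description, and 300-second TTL are hypothetical choices, and the project and network values are placeholders.

```python
import shlex
from typing import List
from urllib.parse import urlparse


def dns_commands(
    workspace_url: str,
    region: str,
    endpoint_ip: str,
    project: str,
    transit_network: str,
    zone: str = "databricks-inbound-psc",  # hypothetical zone name
    include_auth_record: bool = True,
    ttl: int = 300,  # hypothetical TTL
) -> List[List[str]]:
    """Build gcloud commands for the private zone and its A records."""
    host = urlparse(workspace_url).hostname
    if host is None:
        raise ValueError(f"not a URL: {workspace_url}")
    commands = [[
        "gcloud", "dns", "managed-zones", "create", zone,
        f"--project={project}",
        "--dns-name=gcp.databricks.com.",
        "--visibility=private",
        f"--networks={transit_network}",
        "--description=Databricks inbound Private Service Connect",
    ]]
    names = [host, f"dp-{host}"]
    if include_auth_record:  # needed for browser sign-in, optional for REST-only access
        names.append(f"{region}.psc-auth.gcp.databricks.com")
    for name in names:
        commands.append([
            "gcloud", "dns", "record-sets", "create", f"{name}.",
            f"--project={project}", f"--zone={zone}", "--type=A",
            f"--ttl={ttl}", f"--rrdatas={endpoint_ip}",
        ])
    return commands


if __name__ == "__main__":
    for cmd in dns_commands(
        "https://33333333333333.3.gcp.databricks.com", "us-east4", "10.0.0.2",
        project="my-transit-project", transit_network="transit-vpc",  # placeholders
    ):
        print(shlex.join(cmd))
```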

Validate your DNS configuration

In your transit VPC network, use the nslookup tool to confirm that the following URLs now resolve to the inbound Private Service Connect endpoint IP:

- <workspace-url>
- dp-<workspace-url>
- <workspace-gcp-region>.psc-auth.gcp.databricks.com
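To repeat this check from a VM in the transit VPC without running nslookup by hand, you can use Python's standard socket.gethostbyname. The following is a minimal sketch. The resolver is injectable so you can test the logic offline. The hostnames and IP are the example values from this page.

```python
import socket
from typing import Callable, Dict, Iterable


def check_psc_resolution(
    hostnames: Iterable[str],
    expected_ip: str,
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> Dict[str, bool]:
    """Map each hostname to True if it resolves to the PSC endpoint IP."""
    results = {}
    for host in hostnames:
        try:
            results[host] = resolve(host) == expected_ip
        except OSError:  # socket.gaierror is a subclass of OSError
            results[host] = False
    return results


if __name__ == "__main__":
    host = "33333333333333.3.gcp.databricks.com"  # example workspace host
    names = [host, f"dp-{host}", "us-east4.psc-auth.gcp.databricks.com"]
    for name, ok in check_psc_resolution(names, "10.0.0.2").items():
        print(f"{'OK  ' if ok else 'FAIL'} {name}")
```

A FAIL usually means the A record is missing, or the private zone is not attached to the network the check runs from.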

Intermediate DNS name for Private Service Connect

The intermediate DNS name for workspaces that enable either classic compute plane or inbound Private Service Connect is <workspace-gcp-region>.psc.gcp.databricks.com. This lets you separate traffic to the workspaces that users need to access from traffic to other Databricks services that do not support Private Service Connect, such as the account console.

Step 9 (optional): Configure IP access lists

By default, inbound connections to Private Service Connect workspaces allow public access. You can control public access with the Public access enabled option of the workspace's private access settings object. See Step 4: Create a Databricks private access settings object.

Follow these steps to manage public access:

1. Decide on public access:

   - Deny public access: No public connections to the workspace are allowed.
   - Allow public access: You can further restrict access using IP access lists.

2. If you allow public access, configure IP access lists:

   - Set up IP access lists to control which public IP addresses can access your Databricks workspace.
   - IP access lists only affect requests from public IP addresses over the internet. They do not block private traffic from Private Service Connect.

3. To block all internet access:

   - Enable IP access lists for your workspace. See Configure IP access lists for workspaces.
   - Create an IP access list rule: BLOCK 0.0.0.0/0.
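The two "block all internet access" steps correspond to two workspace REST calls. The first enables IP access lists through the workspace configuration endpoint (enableIpAccessLists). The second creates a BLOCK list for 0.0.0.0/0 through the IP access lists endpoint. This sketch only builds the requests. The list label is hypothetical. Send the requests from a client that reaches the workspace over Private Service Connect, because IP access lists do not affect that traffic.

```python
import json
import urllib.request
from typing import List


def _workspace_request(
    workspace_url: str, token: str, path: str, method: str, body: dict
) -> urllib.request.Request:
    """Build (but do not send) a JSON request against the workspace REST API."""
    return urllib.request.Request(
        workspace_url.rstrip("/") + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method=method,
    )


def block_public_internet_requests(workspace_url: str, token: str) -> List[urllib.request.Request]:
    """Return the two calls in order: enable IP access lists, then BLOCK 0.0.0.0/0."""
    enable = _workspace_request(
        workspace_url, token, "/api/2.0/workspace-conf", "PATCH",
        {"enableIpAccessLists": "true"},
    )
    block_all = _workspace_request(
        workspace_url, token, "/api/2.0/ip-access-lists", "POST",
        {"label": "block-all-internet", "list_type": "BLOCK", "ip_addresses": ["0.0.0.0/0"]},
    )
    return [enable, block_all]
```

Send each request in order with urllib.request.urlopen.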

IP access lists do not affect requests from VPC networks connected via Private Service Connect. These connections are managed using the Private Service Connect access level configuration. See Step 4: Create a Databricks private access settings object.

Step 10 (optional): Configure VPC Service Controls

Enhance your private connectivity to Databricks by implementing VPC Service Controls alongside Private Service Connect, which adds another layer of security to keep traffic private. This combined approach helps mitigate data exfiltration risks by isolating your Google Cloud resources and controlling access to your VPC networks.

Configure classic compute plane private access from the compute plane VPC to Cloud Storage

Configure Private Google Access or Private Service Connect to privately access cloud storage resources from your compute plane VPC.

Add your compute plane projects to a VPC Service Controls Service Perimeter

For each Databricks workspace, add the following Google Cloud projects to a VPC Service Controls service perimeter:

- Compute plane VPC host project

- Project containing the workspace storage bucket

- Service projects containing the compute resources of the workspace

With this configuration, you must grant access to both of the following:

- The compute resources and workspace storage bucket from the Databricks control plane

- Databricks-managed storage buckets from the compute plane VPC

Grant this access with the following ingress and egress rules on the VPC Service Controls service perimeter.

To get the project numbers for these ingress and egress rules, see IP addresses and domains for Databricks services and assets.

Ingress rule

You must add an ingress rule to grant access to your VPC Service Controls Service Perimeter from the Databricks control plane VPC. The following is an example ingress rule:

- From:
  - Identities: ANY_IDENTITY
  - Source > Projects =
    - <us-central1-control-plane-vpc-host-project-numbers> (only required for workspace creation)
    - <regional-control-plane-vpc-host-project-numbers>
    - <regional-control-plane-uc-project-number>
    - <regional-control-plane-audit-log-delivery-project-number>
- To:
  - Projects = <list of compute plane Project Ids>
  - Services =
    - Service name: storage.googleapis.com; Service methods: All actions
    - Service name: compute.googleapis.com; Service methods: All actions
    - Service name: container.googleapis.com; Service methods: All actions
    - Service name: logging.googleapis.com; Service methods: All actions
    - Service name: cloudresourcemanager.googleapis.com; Service methods: All actions
    - Service name: iam.googleapis.com; Service methods: All actions

To get the project numbers for your ingress rules, see Private Service Connect (PSC) attachment URIs and project numbers.
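If you manage the perimeter with gcloud, you can express the ingress rule above as an ingress policy file. The following Python sketch builds it in the layout we understand gcloud to expect (ingressFrom/ingressTo, with "All actions" written as method "*") and prints it as JSON, which YAML parsers also accept. VPC Service Controls identifies projects by number, so the placeholders stand for project numbers. Verify the file format against the Google Cloud documentation before you apply it.

```python
import json
from typing import List

# Services from the example ingress rule above, each with "All actions".
INGRESS_SERVICES = (
    "storage.googleapis.com",
    "compute.googleapis.com",
    "container.googleapis.com",
    "logging.googleapis.com",
    "cloudresourcemanager.googleapis.com",
    "iam.googleapis.com",
)


def ingress_policy(control_plane_numbers: List[str], compute_plane_numbers: List[str]) -> list:
    """Build an ingress policy list: Databricks control plane -> compute plane projects."""
    return [{
        "ingressFrom": {
            "identityType": "ANY_IDENTITY",
            "sources": [{"resource": f"projects/{n}"} for n in control_plane_numbers],
        },
        "ingressTo": {
            "operations": [
                {"serviceName": s, "methodSelectors": [{"method": "*"}]}  # all actions
                for s in INGRESS_SERVICES
            ],
            "resources": [f"projects/{n}" for n in compute_plane_numbers],
        },
    }]


if __name__ == "__main__":
    policy = ingress_policy(
        ["<regional-control-plane-vpc-host-project-number>"],  # placeholders
        ["<compute-plane-project-number>"],
    )
    print(json.dumps(policy, indent=2))
```

You could save the output to a file and apply it with a command such as gcloud access-context-manager perimeters update PERIMETER --policy=POLICY_ID --set-ingress-policies=FILE.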

Egress rule

You must add an egress rule to grant access to Databricks-managed storage buckets from the compute plane VPC. The following is an example egress rule:

- From:
  - Identities: ANY_IDENTITY
- To:
  - Projects =
    - <regional-control-plane-asset-project-number>
    - <regional-control-plane-vpc-host-project-numbers>
  - Services =
    - Service name: storage.googleapis.com; Service methods: All actions
    - Service name: artifactregistry.googleapis.com; Service methods: artifactregistry.googleapis.com/DockerRead

To get the project numbers for your egress rules, see Private Service Connect (PSC) attachment URIs and project numbers.
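You can build the matching egress policy file the same way. This sketch is likewise based on our understanding of the gcloud format. One further assumption: the Artifact Registry restriction is expressed as a method selector named artifactregistry.googleapis.com/DockerRead. Confirm that against the VPC Service Controls documentation on supported method restrictions.

```python
import json
from typing import List


def egress_policy(databricks_project_numbers: List[str]) -> list:
    """Build an egress policy list: compute plane -> Databricks-managed projects."""
    return [{
        "egressFrom": {"identityType": "ANY_IDENTITY"},
        "egressTo": {
            "operations": [
                # Cloud Storage: all actions.
                {"serviceName": "storage.googleapis.com", "methodSelectors": [{"method": "*"}]},
                # Artifact Registry: image pulls only (assumed selector form).
                {
                    "serviceName": "artifactregistry.googleapis.com",
                    "methodSelectors": [{"method": "artifactregistry.googleapis.com/DockerRead"}],
                },
            ],
            "resources": [f"projects/{n}" for n in databricks_project_numbers],
        },
    }]


if __name__ == "__main__":
    print(json.dumps(egress_policy([
        "<regional-control-plane-asset-project-number>",    # placeholders
        "<regional-control-plane-vpc-host-project-number>",
    ]), indent=2))
```

The matching gcloud flag for this file is --set-egress-policies.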

Access data lake storage buckets secured by VPC Service Controls

Add the Google Cloud projects containing the data lake storage buckets to a VPC Service Controls Service Perimeter.

You do not require any additional ingress or egress rules if the data lake storage buckets and the Databricks workspace projects are in the same VPC Service Controls Service Perimeter.

If the data lake storage buckets are in a separate VPC Service Controls Service Perimeter, you must configure the following:

- Ingress rules on data lake Service Perimeter:
  - Allow access to Cloud Storage from the Databricks compute plane VPC.
  - Allow access to Cloud Storage from the Databricks control plane VPC using the project IDs documented on the regions page. This access is required as Databricks introduces new data governance features such as Unity Catalog.
- Egress rules on Databricks compute plane Service Perimeter:
  - Allow egress to Cloud Storage on data lake projects.