MLflow 3 traditional ML workflow

Example notebook

The example notebook runs a model training job, which is tracked as an MLflow Run, to produce a trained model, which is tracked as an MLflow Logged Model.

MLflow 3 traditional ML model notebook

Explore model parameters and performance using the MLflow UI

To explore the model in the MLflow UI:

-

Click Experiments in the workspace sidebar.

-

Find your experiment in the experiments list. You can select the Only my experiments checkbox or use the Filter experiments search box to filter the list of experiments.

-

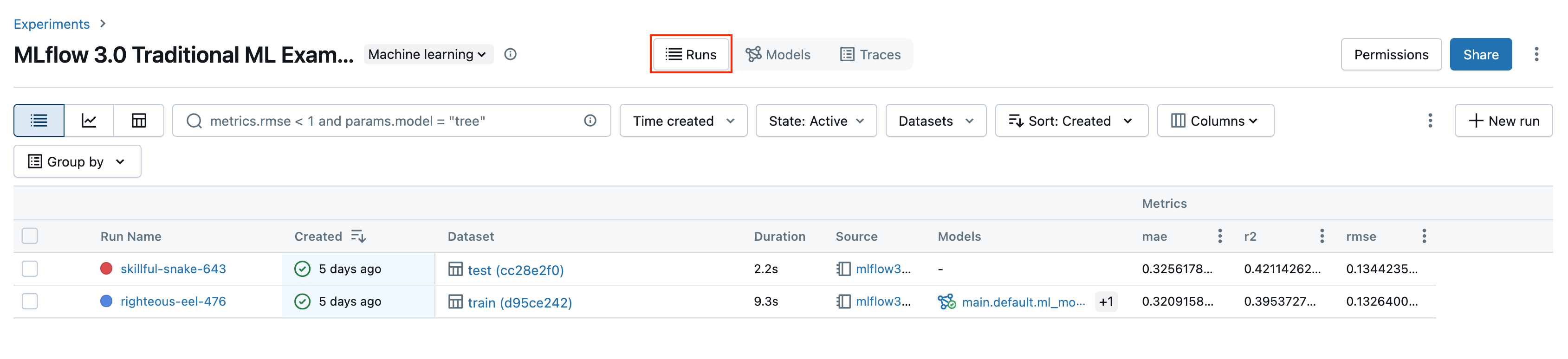

Click the name of your experiment. The Runs page opens. The experiment contains two MLflow runs, one used to train the model and one used to evaluate the model.

-

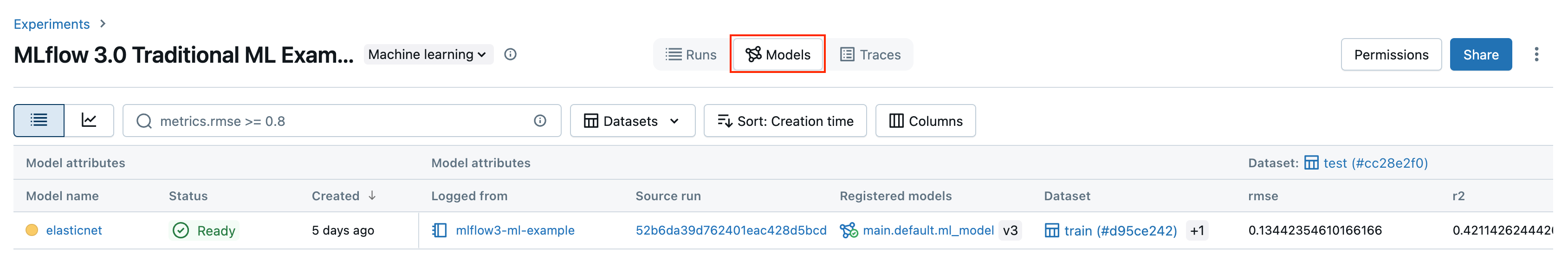

Click the Models tab. The

LoggedModel(elasticnet) is tracked on this screen. You can see all of the parameters and metadata, as well as all of the metrics linked from the training and evaluation runs.

-

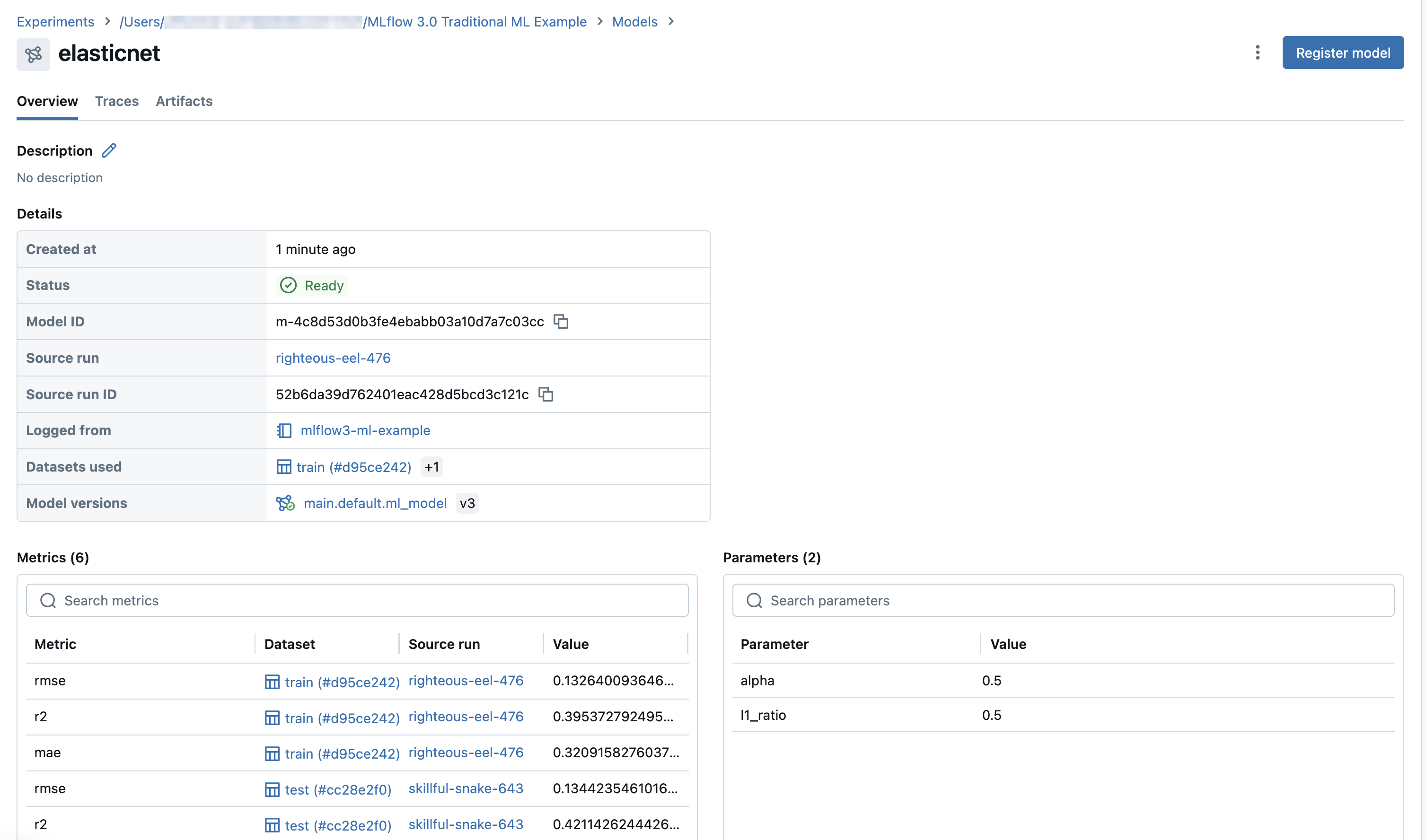

Click the model name to display the model page, which contains information like the model's parameters and metrics, as well as details such as its source run, relevant datasets, and model versions registered in Unity Catalog.

-

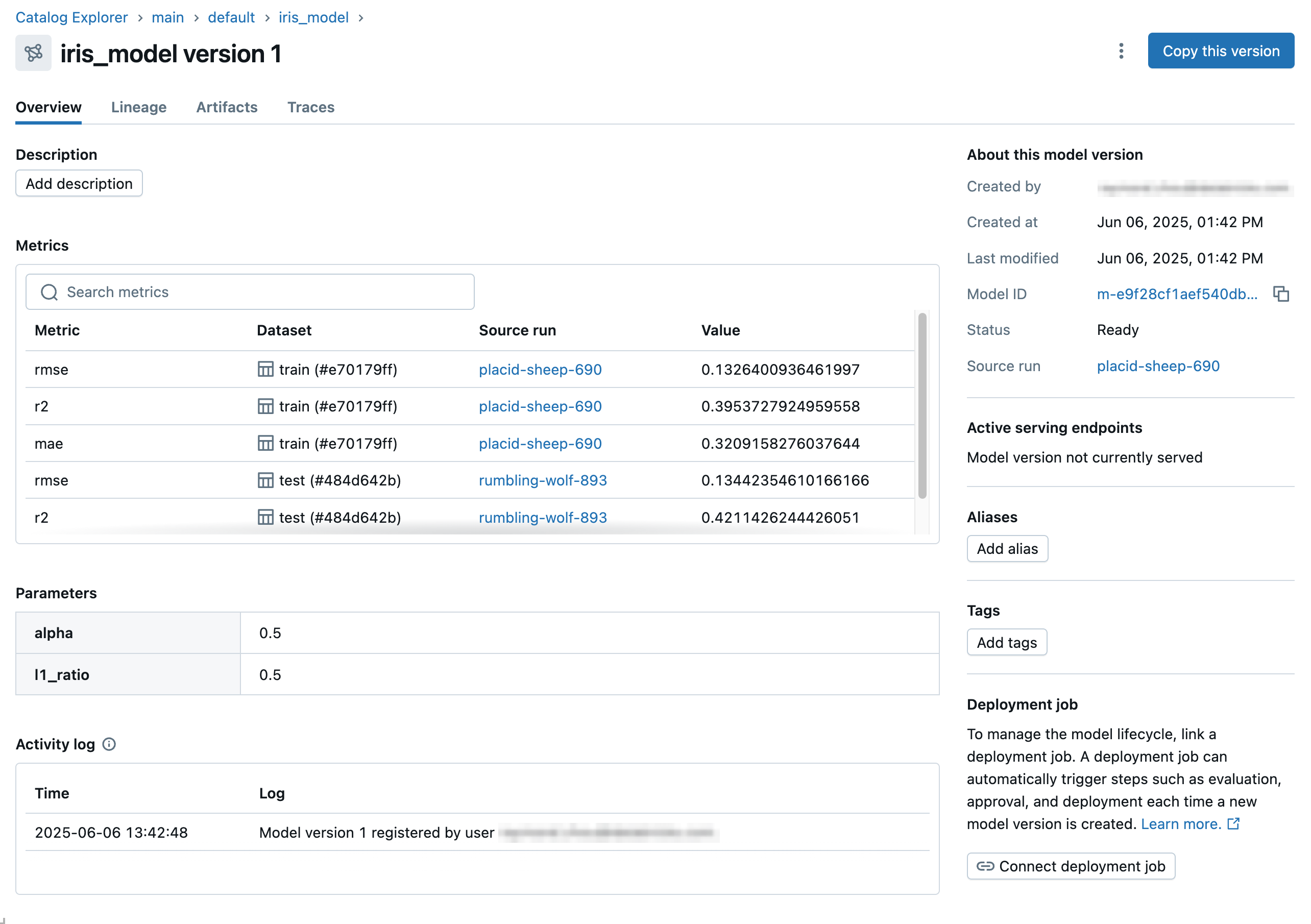

The notebook registers the model to Unity Catalog. As a result, all model parameters and performance data are available on the model version page in Catalog Explorer. You can get to this page directly by clicking on the model version from the MLflow model page. Clicking on the model ID and source run here will bring you back to the MLflow model and run pages respectively.

What's the difference between the Models tab on the MLflow experiment page and the model version page in Catalog Explorer?

The Models tab of the experiment page and the model version page in Catalog Explorer show similar information about the model, but the two views play different roles in the model development and deployment lifecycle.

- The Models tab of the experiment page presents the results of logged models from an experiment on a single page. The Charts tab on this page provides visualizations to help you compare models and select the model versions to register to Unity Catalog for possible deployment.

- In Catalog Explorer, the model version page provides an overview of all model performance and evaluation results. This page shows model parameters, metrics, and traces across all linked environments including different workspaces, endpoints, and experiments. This is useful for monitoring and deployment, and works especially well with deployment jobs. The evaluation task in a deployment job creates additional metrics that appear on this page. The approver for the job can then review this page to assess whether to approve the model version for deployment.

Next steps

To learn more about LoggedModel tracking introduced in MLflow 3, see the following article:

To learn more about using MLflow 3 with deep learning workflows, see the following article: