Hive metastore federation: enable Unity Catalog to govern tables registered in a Hive metastore

This article introduces Hive metastore federation, a feature that enables Unity Catalog to govern tables that are stored in a Hive metastore. You can federate an external Hive metastore, AWS Glue, or a legacy internal Databricks Hive metastore.

Hive metastore federation can be used for the following use cases:

- As a step in the migration path to Unity Catalog, enabling incremental migration without code adaptation, with some of your workloads continuing to use data registered in your Hive metastore while others are migrated.

  This use case is most suited for organizations that use a legacy internal Databricks Hive metastore today, because federated internal Hive metastores allow both read and write workloads.

- To provide a longer-term hybrid model for organizations that must maintain some data in a Hive metastore alongside their data that is registered in Unity Catalog.

  This use case is most suited for organizations that use an external Hive metastore or AWS Glue today, because foreign catalogs for these Hive metastores are read-only.

Overview of Hive metastore federation

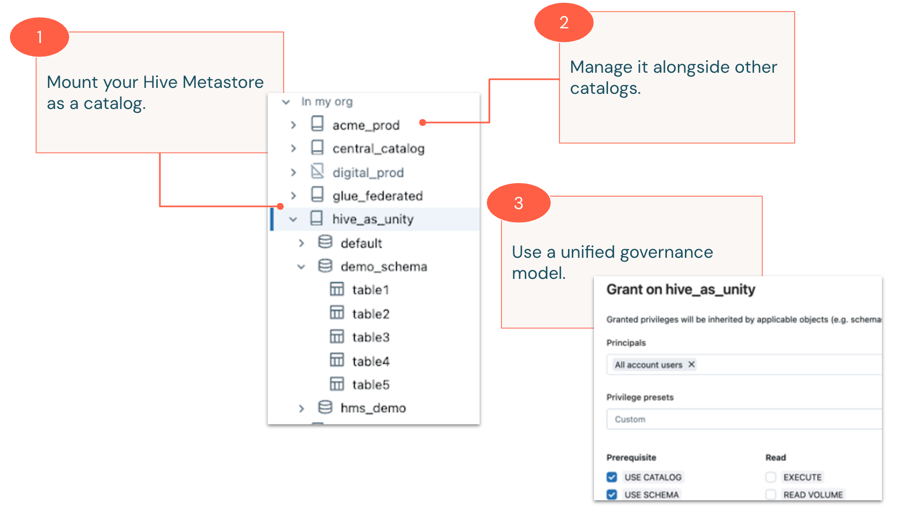

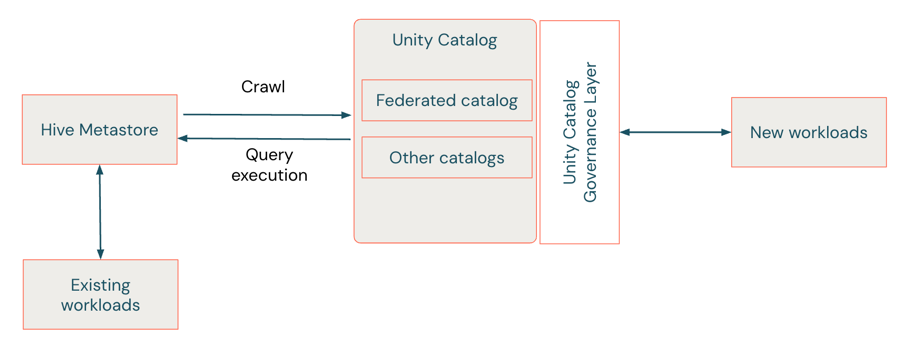

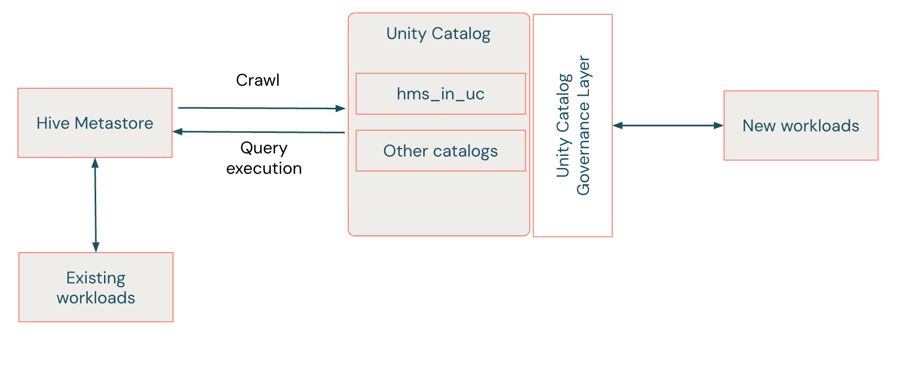

In Hive metastore federation, you create a connection from your Databricks workspace to your Hive metastore, and Unity Catalog crawls the Hive metastore to populate a foreign catalog, sometimes called a federated catalog. The foreign catalog enables your organization to work with your Hive metastore tables in Unity Catalog, which provides centralized access controls, lineage, search, and more.

Federated Hive metastores that are external to your Databricks workspace, including AWS Glue, allow only reads using Unity Catalog. Federated internal Hive metastores allow reads and writes: when you modify metadata, both the Hive metastore metadata and the Unity Catalog metadata are updated.

When you query foreign tables in a federated Hive metastore, Unity Catalog provides the governance layer, performing functions such as access control checks and auditing, while queries are executed using Hive metastore semantics. For example, if a user queries a table stored in Parquet format in a foreign catalog, then:

- Unity Catalog checks if the user has access to the table and infers lineage for the query.

- The query itself runs against the underlying Hive metastore, leveraging the latest metadata and partition information stored there.

How does Hive metastore federation compare to using Unity Catalog external tables?

Unity Catalog has the ability to create external tables, taking data that already exists in an arbitrary cloud storage location and registering it in Unity Catalog as a table. This section explores the differences between external and foreign tables in a federated Hive metastore.

Both table types have the following properties:

- Can be used to register an arbitrary location in cloud storage as a table.

- Can apply Unity Catalog permissions and fine-grained access controls.

- Can be viewed in lineage for queries that reference them.

Foreign tables in a federated Hive metastore have the following properties:

- Are automatically discovered based on crawling a Hive metastore. As soon as tables are created in the Hive metastore, they are surfaced and available to query in the Unity Catalog foreign catalog.

- Allow tables to be defined with Hive semantics such as Hive SerDes and partitions.

- Allow tables to have overlapping paths with other tables in foreign catalogs.

- Allow tables to be located in DBFS root locations.

- Include views that are defined in Hive metastore.

In this way you can think of foreign tables in a federated Hive metastore as offering backwards compatibility with Hive metastore, allowing workloads to use Hive-only semantics but with governance provided by Unity Catalog.

However, some Unity Catalog features are not available on foreign tables, for example:

- Features available only for Unity Catalog managed tables, such as predictive optimization.

- Vector search, Delta Sharing, data quality monitoring, and online tables.

- Some feature store functionality, including feature store creation, model serving creation, feature spec creation, model logging and batch scoring.

Performance can be marginally worse than for workloads that run directly on Unity Catalog or Hive metastore, because both Hive metastore and Unity Catalog are on the query path of a foreign table.

For more information about supported functionality, see Requirements and feature support.

What does it mean to write to a foreign catalog in a federated Hive metastore?

Writes are supported only for federated internal Hive metastores, not external Hive metastores or AWS Glue.

Writes to federated metastores are of two types:

- DDL operations such as CREATE TABLE, ALTER TABLE, and DROP TABLE.

  DDL operations are synchronously reflected in the underlying Hive metastore. For example, running a CREATE TABLE statement creates the table in both the Hive metastore and the foreign catalog.

  Warning: This also means that DROP commands are reflected in the Hive metastore. For example, DROP SCHEMA mySchema CASCADE drops all tables in the underlying Hive metastore schema, without the option to UNDROP, because Hive metastore does not support UNDROP.

- DML operations such as INSERT, UPDATE, and DELETE.

  DML operations are also synchronously reflected in the underlying Hive metastore table. For example, running INSERT INTO adds records to the table in the Hive metastore.

Write support is a key to enabling a seamless transition during migration from Hive metastore to Unity Catalog. See How do you use Hive metastore federation during migration to Unity Catalog?.
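
As an illustration of the two write types, the following sketch assumes a foreign catalog named hms_in_uc that federates a legacy internal Hive metastore and already contains a schema named sales. The catalog, schema, table, and column names are hypothetical.

```sql
-- Assumption: hms_in_uc is a foreign catalog for a federated internal
-- Hive metastore, and the schema hms_in_uc.sales already exists.

-- DDL: the new table is registered in both the Hive metastore
-- and the foreign catalog.
CREATE TABLE hms_in_uc.sales.orders_staging (
  order_id BIGINT,
  amount   DECIMAL(10, 2)
);

-- DML: the inserted rows are visible to workloads that read the table
-- through the Hive metastore as well.
INSERT INTO hms_in_uc.sales.orders_staging VALUES (1, 19.99), (2, 5.00);

-- DDL: the drop also removes the table from the Hive metastore,
-- and it cannot be reversed with UNDROP.
DROP TABLE hms_in_uc.sales.orders_staging;
```

Against a foreign catalog for an external Hive metastore or AWS Glue, these statements would be expected to fail, because those catalogs are read-only.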

How do you set up Hive metastore federation?

To set up Hive metastore federation, you do the following:

1. Create a connection in Unity Catalog that specifies the path and credentials for accessing the Hive metastore.

   Hive metastore federation uses this connection to crawl the Hive metastore. For most database systems, you supply a username and password. For AWS Glue, you supply an IAM role. For a connection to a legacy internal Databricks workspace Hive metastore, Hive metastore federation takes care of authorization.

2. Create a storage credential and an external location in Unity Catalog for the paths to the tables registered in the Hive metastore.

   External locations contain paths and the storage credentials required to access those paths. Storage credentials are Unity Catalog securable objects that specify credentials, such as IAM roles, for access to cloud storage. Depending on the workflow you choose for creating external locations, you might have to create storage credentials before you create the external location.

3. Create a foreign catalog in Unity Catalog, using the connection that you created in step 1.

   This is the catalog that workspace users and workflows use to work with Hive metastore tables using Unity Catalog. After you've created the foreign catalog, Unity Catalog populates it with the tables registered in the Hive metastore.

4. Grant privileges to the tables in the foreign catalog using Unity Catalog.

   You can also use Unity Catalog row and column filters for fine-grained access control.

5. Start querying data.

Access to foreign tables using Unity Catalog is read-only for external Hive metastores and AWS Glue metastores, and read-and-write for internal Hive metastores.

For internal Hive metastores, external Hive metastores, and Glue metastores, Unity Catalog continuously updates table metadata as it changes in the Hive metastore. For internal Hive metastores, new tables and table updates committed from the foreign catalog are written back to the Hive metastore, maintaining full interoperability between the Unity Catalog and Hive metastore catalogs.

For detailed instructions, see:

- Enable Hive metastore federation for a legacy workspace Hive metastore

- Enable Hive metastore federation for an external Hive metastore

- Enable Hive metastore federation for AWS Glue metastores
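
As a rough sketch, the setup steps above might look like the following in SQL for a legacy internal Hive metastore. All object names (hms_connection, hms_storage_credential, hms_tables_location, hms_in_uc, sales.orders, data-analysts) and the s3://bucket/ path are hypothetical, and the storage credential is assumed to exist already. The connection and catalog options shown are an assumption about the syntax for this metastore type; the detailed instructions listed above are authoritative.

```sql
-- Step 1: connection to the workspace's internal Hive metastore.
-- Assumption: "builtin true" selects the legacy internal metastore,
-- so no username or password is supplied.
CREATE CONNECTION IF NOT EXISTS hms_connection TYPE hive_metastore
OPTIONS (builtin true);

-- Step 2: external location covering the table paths.
-- Assumption: hms_storage_credential was created beforehand.
CREATE EXTERNAL LOCATION IF NOT EXISTS hms_tables_location
URL 's3://bucket/'
WITH (STORAGE CREDENTIAL hms_storage_credential);

-- Step 3: foreign catalog that mirrors the Hive metastore.
CREATE FOREIGN CATALOG IF NOT EXISTS hms_in_uc
USING CONNECTION hms_connection
OPTIONS (authorized_paths 's3://bucket/');

-- Step 4: Unity Catalog privileges for a hypothetical group.
GRANT USE CATALOG ON CATALOG hms_in_uc TO `data-analysts`;
GRANT USE SCHEMA ON SCHEMA hms_in_uc.sales TO `data-analysts`;
GRANT SELECT ON TABLE hms_in_uc.sales.orders TO `data-analysts`;

-- Step 5: query through the foreign catalog.
SELECT * FROM hms_in_uc.sales.orders LIMIT 10;
```

For an external Hive metastore or AWS Glue, the connection in step 1 would instead carry the host and credential details for that system.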

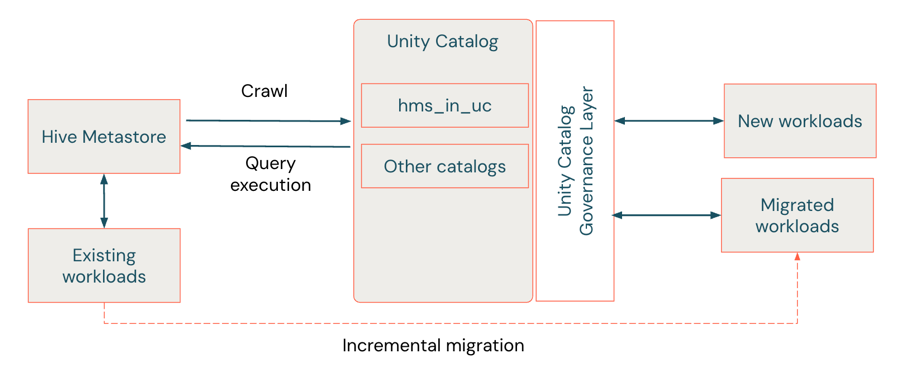

How do you use Hive metastore federation during migration to Unity Catalog?

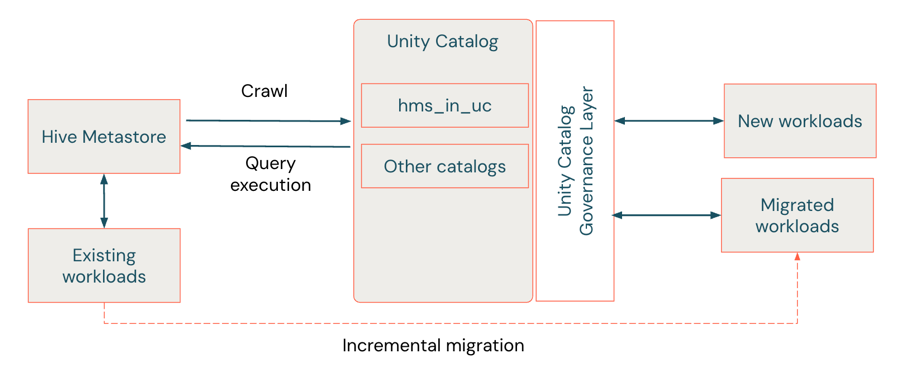

Hive metastore federation lets you migrate to Unity Catalog incrementally by reducing the need for coordination between teams and workloads. In particular, if you are migrating from your Databricks workspace's internal Hive metastore, the ability to read from and write to both the Hive metastore and the Unity Catalog metastore means that you can maintain “mirrored” metastores during your migration, providing the following benefits:

- Workloads that run against foreign catalogs run in Hive metastore compatibility mode, reducing the cost of code adaptation during migration.

- Each workload can choose to migrate independently of others, knowing that, during the migration period, data will be available in both Hive metastore and Unity Catalog, alleviating the need to coordinate between workloads that have dependencies on one another.

This section describes a typical workflow for migrating a Databricks workspace's internal legacy Hive metastore to Unity Catalog, with Hive metastore federation easing the transition. It does not apply to migrating an external Hive or AWS Glue metastore. Foreign catalogs for external Hive metastores do not support writes.

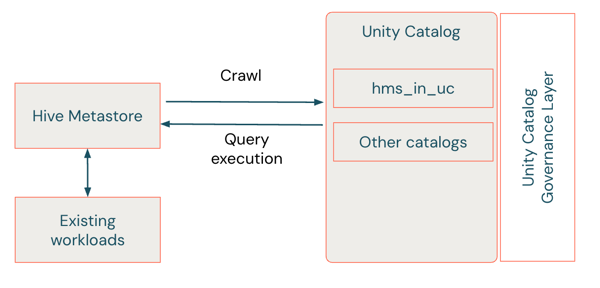

Step 1. Federate the internal Hive metastore

In this step, you create a foreign catalog that mirrors your Hive metastore in Unity Catalog. Let's call it hms_in_uc.

As part of the federation process, you set up external locations to provide access to the data in cloud storage. In migration scenarios in which some workloads are querying the data using legacy access mechanisms and other workloads are querying the same data in Unity Catalog, the Unity Catalog-managed access controls on external locations can prevent the legacy workloads from accessing the paths to storage from Unity Catalog-enabled compute. You can enable “fallback mode” on these external locations to fall back on any cluster- or notebook-scoped credentials that were defined for the legacy workload. Then when your migration is done, you turn fallback mode off. See What is fallback mode?.

For details, see Enable Hive metastore federation for a legacy workspace Hive metastore.

Step 2. Run new workloads against the foreign catalog in Unity Catalog

When you have a foreign catalog in place, you can grant SQL analysts and data science consumers access to it and start developing new workloads that point to it. The new workloads benefit from the additional feature set in Unity Catalog, including access controls, search, and lineage.

In this step, you typically do the following:

- Choose Unity Catalog-compatible compute (that is, standard or dedicated compute access modes, SQL warehouses, or serverless compute). See Requirements and feature support.

- Make the foreign catalog the default catalog on the compute resource or add USE CATALOG hms_in_uc to the top of your code. Because schemas and table names in the foreign catalog are exact mirrors of those in the Hive metastore, your code will start referring to the foreign catalog.
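
For example, a notebook or query that used two-level names against the Hive metastore could be pointed at the foreign catalog as sketched below. The schema and table names (sales.orders) are hypothetical.

```sql
-- Make the foreign catalog the current catalog for this session.
USE CATALOG hms_in_uc;

-- Optional check that the session now targets the foreign catalog.
SELECT current_catalog();

-- Existing two-level references now resolve against hms_in_uc.
SELECT * FROM sales.orders LIMIT 10;

-- Equivalent fully qualified form, which does not depend on the
-- current catalog.
SELECT * FROM hms_in_uc.sales.orders LIMIT 10;
```

Setting the default catalog on the compute resource has the same effect without touching the code, which is usually preferable when many notebooks share the compute.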

Step 3. Migrate existing jobs to run against the foreign catalog

To migrate existing jobs to query the foreign catalog:

- Change the default catalog on the job cluster to be hms_in_uc, either by setting a property on the cluster itself or by adding USE CATALOG hms_in_uc at the top of your code.

- Switch the job to standard or dedicated access mode compute and upgrade to one of the Databricks Runtime versions that supports Hive metastore federation. See Requirements and feature support.

- Ask a Databricks admin to grant the correct Unity Catalog privileges on the data objects in hms_in_uc and on any cloud storage paths (included in Unity Catalog external locations) that the job accesses. See Manage privileges in Unity Catalog.
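
The grants that an admin issues for a migrated job might resemble the following sketch. The group name (etl-jobs), schema name (sales), and external location name (hms_tables_location) are hypothetical, and the exact set of privileges depends on what the job reads and writes.

```sql
-- Let the job's principal see and use the foreign catalog.
GRANT USE CATALOG ON CATALOG hms_in_uc TO `etl-jobs`;

-- Read and write access to every table in one schema.
GRANT USE SCHEMA, SELECT, MODIFY ON SCHEMA hms_in_uc.sales TO `etl-jobs`;

-- Needed only if the job also reads files by path rather than by table name.
GRANT READ FILES ON EXTERNAL LOCATION hms_tables_location TO `etl-jobs`;

-- Review what the principal has been granted on the schema.
SHOW GRANTS `etl-jobs` ON SCHEMA hms_in_uc.sales;
```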

Step 4. Disable direct access to the Hive metastore

Once you've migrated all of your workloads to query the foreign catalog, you no longer need the Hive metastore.

- Disable direct access to the Hive metastore.

  See Disable access to the Hive metastore used by your Databricks workspace.

- Prevent users from creating and using clusters that bypass table access control (clusters that use no isolation shared access mode or a legacy custom cluster type) using compute policies and the Enforce user isolation workspace setting.

  See Compute configurations and Enforce user isolation cluster types on a workspace.

- Make the federated catalog the workspace default catalog.

Frequently asked questions

The following sections provide more detailed information about Hive metastore federation.

What is fallback mode?

Fallback mode is a setting on external locations that you can use to bypass Unity Catalog permission checks during migration to Unity Catalog. Setting it ensures that workloads that haven't yet been migrated are not impacted during the setup phase.

Unity Catalog gains access to cloud storage using external locations, which are securable objects that define a path and a credential to access your cloud storage account. You can issue permissions on them, like READ FILES, to govern who can use the path. One challenge during the migration process is that you might not want Unity Catalog to start governing all access to the path immediately, for example, when you have existing, unmigrated workloads that reference the path.

Fallback mode allows you to delay the strict enforcement of Unity Catalog access control on external locations. When fallback mode is enabled, workloads that access a path are first checked against Unity Catalog permissions, and if they fail, fall back to using cluster- or notebook-scoped credentials, such as instance profiles or Apache Spark configuration properties. This allows existing workloads to continue using their current credentials.

Fallback mode is intended only for use during migration. You should turn it off when all workloads have been migrated and you are ready to enforce Unity Catalog access controls.

Query audit log for fallback usage

Use the following query to check whether any access to the external location used fallback mode in the last 30 days. Replace some-path with part of the external location's path. If there is no fallback-mode access in your account, Databricks recommends turning off fallback mode.

SELECT event_time, user_identity, action_name, request_params, response, identity_metadata

FROM system.access.audit

WHERE

request_params.fallback_enabled = 'true' AND

request_params.path LIKE '%some-path%' AND

event_time >= current_date() - INTERVAL 30 DAYS

LIMIT 10
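
To see who is still relying on fallback credentials, rather than only whether fallback occurred, a grouped variant of the query above can help. This is a sketch: it assumes that the user_identity column exposes an email field, and some-path remains a placeholder for part of your external location's path.

```sql
-- Count fallback-mode accesses per user and action over the last 30 days.
-- Assumption: user_identity is a struct with an email field.
SELECT
  user_identity.email AS user_email,
  action_name,
  COUNT(*)            AS fallback_events,
  MAX(event_time)     AS last_fallback_time
FROM system.access.audit
WHERE request_params.fallback_enabled = 'true'
  AND request_params.path LIKE '%some-path%'
  AND event_time >= current_date() - INTERVAL 30 DAYS
GROUP BY user_identity.email, action_name
ORDER BY fallback_events DESC;
```

Users or jobs that appear in the result are candidates for the Unity Catalog grants they still need before fallback mode is turned off.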

What are authorized paths?

When you create a foreign catalog that is backed by Hive metastore federation, you are prompted to provide authorized paths to the cloud storage where the Hive metastore tables are stored. Any table that you want to access using Hive metastore federation must be covered by these paths. Databricks recommends that your authorized paths be sub-paths that are common across a large number of tables. For example, if you have tables at s3://bucket/table1, s3://bucket/table2, and s3://bucket/table3, you should provide s3://bucket/ as an authorized path.

You can modify the authorized paths for an existing catalog if you are the catalog owner, or you have MANAGE and USE CATALOG permissions.

To edit authorized paths for an existing catalog:

- In your Databricks workspace, click Catalog.

- In the Catalog pane, find and click the federated catalog.

- On the catalog details page, click the icon and select Edit authorized paths.

- Add or remove paths as needed.

- Click Save.

You can use UCX to help you identify the paths that are present in your Hive metastore.

Authorized paths add an extra layer of security on foreign catalogs that are backed by Hive metastore federation. They enable the catalog owner to apply guardrails to the data that users can access using federation. This is useful if your Hive metastore allows users to update metadata and arbitrarily alter table locations—updates that would otherwise be synchronized into the foreign catalog. In this scenario, users could potentially redefine tables that they already have access to so that they point to new locations that they would otherwise not have access to.

Can I federate Hive metastores using UCX?

UCX, the Databricks Labs project for migrating Databricks workspaces to Unity Catalog, includes utilities for enabling Hive metastore federation:

- enable-hms-federation

- create-federated-catalog

See the project readme in GitHub. For an introduction to UCX, see Use the UCX utilities to upgrade your workspace to Unity Catalog.

Refresh metadata using Lakeflow Jobs

Unity Catalog automatically refreshes metadata for foreign tables at query time. If the external catalog's schema changes, Unity Catalog fetches the latest metadata when the query runs. This ensures that you always see the current schema and is optimal for most workloads.

However, Databricks recommends manually refreshing metadata in the following cases:

- To ensure consistency for foreign tables accessed by external engines. Paths that bypass Databricks Runtime don’t trigger automatic refreshes, which can result in stale metadata.

- To improve performance for workloads where you want to avoid metadata refresh during query execution. Refreshing metadata proactively allows queries to run faster using cached metadata. This is especially useful immediately after creating a foreign catalog because the first query would otherwise trigger a full refresh.

In these cases, schedule a periodic metadata refresh using a Lakeflow Job with the REFRESH FOREIGN SQL command. For example:

-- Refresh an entire catalog

> REFRESH FOREIGN CATALOG some_catalog;

-- Refresh a specific schema

> REFRESH FOREIGN SCHEMA some_catalog.some_schema;

-- Refresh a specific table

> REFRESH FOREIGN TABLE some_catalog.some_schema.some_table;

Configure the job to run at regular intervals depending on how often you anticipate external schema changes.

Requirements and feature support

The following table lists the services and features that are supported by Hive metastore federation. In some cases, unsupported services or features are also listed. In these tables, “HMS” stands for Hive metastore.

| Category | Supported | Not supported |
|---|---|---|
| Metastores | | |
| Operations | | |
| Hive metastore data assets | | |
| Storage | | |
| Compute types | | No isolation clusters |
| Compute versions | | |
| Unity Catalog features | | |

Working with shallow clones

Shallow clone support is in Public Preview.

Hive metastore federation supports the creation of shallow clones from tables registered in Hive metastore, with the following caveats:

- When you read the shallow clone from a Hive metastore federated catalog, the clone has a DEGRADED provisioning state. This indicates that the shallow clone uses the Hive permission model, which requires the user who reads from the shallow clone table to have the SELECT privilege on both the shallow clone and the base table.

  To upgrade the shallow clone to be consistent with the Unity Catalog permission model, the table owner must run REPAIR TABLE <table> SYNC METADATA. After the command is run, the table's provisioning state changes to ACTIVE and permissions are controlled by Unity Catalog thereafter. Subsequent reads on the shallow clone require SELECT only on the shallow clone itself, as long as the command is run on compute that supports Unity Catalog.

- Shallow clones that are created in DBFS or based on tables that are mounted in DBFS are not supported.
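
As a sketch of the upgrade described above, the table owner might run the following on Unity Catalog-compatible compute. The catalog, schema, and table names are hypothetical; orders_clone stands for an existing shallow clone that is visible through the federated catalog.

```sql
-- Run as the table owner: switch the shallow clone from the Hive
-- permission model to the Unity Catalog permission model.
REPAIR TABLE hms_in_uc.sales.orders_clone SYNC METADATA;

-- After the repair, a reader needs SELECT only on the clone itself,
-- not on the base table.
SELECT * FROM hms_in_uc.sales.orders_clone LIMIT 10;
```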

Limitations

- You cannot query federated tables where table files are stored outside the federated table’s location. These could include tables where partitions are stored outside the table location, or in the case of Avro tables, where the schema is referenced using the avro.schema.url table property. When querying such tables, an UNAUTHORIZED_ACCESS or AccessDeniedException exception may be thrown.