July 2019

These features and Databricks platform improvements were released in July 2019.

Releases are staged. Your Databricks account may not be updated until up to a week after the initial release date.

Coming soon: Databricks 6.0 will not support Python 2

In anticipation of the upcoming end of life of Python 2, announced for 2020, Python 2 will not be supported in Databricks Runtime 6.0. Earlier versions of Databricks Runtime will continue to support Python 2. We expect to release Databricks Runtime 6.0 later in 2019.

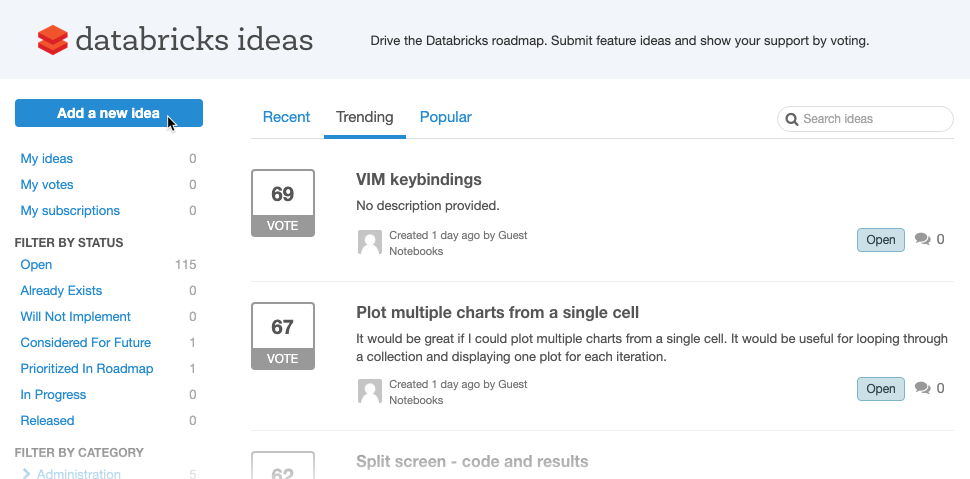

Ideas Portal

July 30, 2019

Our new Submit product feedback, powered by Aha!, lets you provide feedback directly to the Databricks Product team, getting your feature requests in front of decision-makers as they go through their product planning and development process. Use the Ideas Portal to:

- Enter feature requests.

- View, comment, and vote up other users' requests.

- Monitor the progress of your favorite ideas and watch them turn into features.

To get started, just click Help at the lower left of your Databricks workspace, select Feedback, and follow the prompts.

Preload the Databricks Runtime version on pool idle instances

July 30 - Aug 6, 2019: Version 2.103

You can now speed up pool-backed cluster launches by selecting a Databricks Runtime version to be loaded on idle instances in the pool. The field on the Pool UI is called Preloaded Spark Version.

Custom cluster tags and pool tags play better together

July 30 - Aug 6, 2019: Version 2.103

Earlier this month, Databricks introduced pools, a set of idle instances that help you spin up clusters fast. In the original release, pool-backed clusters inherited default and custom tags from the pool configuration, and you could not modify these tags at the cluster level. Now you can configure custom tags specific to a pool-backed cluster, and that cluster will apply all custom tags, whether inherited from the pool or assigned to that cluster specifically. You cannot add a cluster-specific custom tag with the same key name as a custom tag inherited from a pool (that is, you cannot override a custom tag that is inherited from the pool). For details, see Pool tags.

MLflow 1.1 brings several UI and API improvements

July 30 - Aug 6, 2019: Version 2.103

MLflow 1.1 introduces several new features to improve UI and API usability:

-

The runs overview UI now lets you browse through multiple pages of runs if the number of runs exceeds 100. After the 100th run, click the Load more button to load the next 100 runs.

-

The compare runs UI now provides a parallel coordinates plot. The plot allows you to observe relationships between an n-dimensional set of parameters and metrics. It visualizes all runs as lines that are color-coded based on the value of a metric (for example, accuracy), and shows the parameter values that each run took on.

-

Now you can add and edit tags from the run overview UI and view tags in the experiment search view.

-

The new MLflowContext API lets you create and log runs in a way that is similar to the Python API. This API contrasts with the existing low-level

MlflowClientAPI, which simply wraps the REST APIs. -

You can now delete tags from MLflow runs using the DeleteTag API.

For details, see the MLflow 1.1 blog post. For the complete list of features and fixes, see the MLflow Changelog.

pandas DataFrame display renders like it does in Jupyter

July 30 - Aug 6, 2019: Version 2.103

Now when you call a pandas DataFrame, it will render the same way as it does in Jupyter.

New regions

July 30, 2019

Databricks is now available in eu-west-3 (Paris) for select workspaces only.

Databricks Runtime 5.5 with Conda (Beta)

July 23, 2019

Databricks Runtime with Conda is in Beta. The contents of the supported environments may change in upcoming Beta releases. Changes can include the list of packages or versions of installed packages. Databricks Runtime 5.5 with Conda is built on top of Databricks Runtime 5.5 LTS (EoS).

The Databricks Runtime 5.5 with Conda release adds a new notebook-scoped library API to support updating the notebook's Conda environment with a YAML specification (see Conda documentation).

See the complete release notes at Databricks Runtime 5.5 with Conda (EoS).

Set permissions on pools (Public Preview)

July 16 - 23, 2019: Version 2.102

The pool UI now supports setting permissions on who can manage pools and who can attach clusters to pools.

For details, see Pool permissions.

Databricks Runtime 5.5 for Machine Learning

July 15, 2019

Databricks Runtime 5.5 ML is built on top of Databricks Runtime 5.5 LTS (EoS). It contains many popular machine learning libraries, including TensorFlow, PyTorch, Keras, and XGBoost, and provides distributed TensorFlow training using Horovod.

This release includes the following new features and improvements:

- Added the MLflow 1.0 Python package

- Upgraded machine learning libraries

- TensorFlow upgraded from 1.12.0 to 1.13.1

- PyTorch upgraded from 0.4.1 to 1.1.0

- scikit-learn upgraded from 0.19.1 to 0.20.3

- Single-node operation for HorovodRunner

For details, see Databricks Runtime 5.5 LTS for ML (EoS).

Databricks Runtime 5.5

July 15, 2019

Databricks Runtime 5.5 is now available. Databricks Runtime 5.5 includes Apache Spark 2.4.3, upgraded Python, R, Java, and Scala libraries, and the following new features:

- Delta Lake on Databricks Auto Optimize GA

- Delta Lake on Databricks improved min, max, and count aggregation query performance

- Presto and Athena support for Delta tables on AWS S3 (Public Preview)

- Glue Catalog as Databricks metastore

- Faster model inference pipelines with improved binary file data source and scalar iterator pandas UDF (Public Preview)

- Secrets API in R notebooks

For details, see Databricks Runtime 5.5 LTS (EoS).

Keep a pool of instances on standby for quick cluster launch (Public Preview)

July 9 - 11, 2019: Version 2.101

To reduce cluster start time, Databricks now supports attaching a cluster to a pre-defined pool of idle instances. When attached to a pool, a cluster allocates its driver and worker nodes from the pool. If the pool does not have sufficient idle resources to accommodate the cluster's request, the pool expands by allocating new instances from the cloud provider. When an attached cluster is terminated, the instances it used are returned to the pool and can be reused by a different cluster.

Databricks does not charge DBUs while instances are idle in the pool. Instance provider billing does apply. See pricing.

For details, see Pool configuration reference.

Global series color

July 9 - 11, 2019: Version 2.101

You can now specify that the colors of a series should be consistent across all charts in your notebook. See Color consistency across charts.