Route optimization on serving endpoints

This article describes how to enable route optimization on your model serving or feature serving endpoints. Route-optimized serving endpoints have substantially lower overhead latency and support higher throughput than endpoints without route optimization.

Route-optimized endpoints are queried differently from non-route-optimized endpoints: they use a different URL and require authentication with OAuth tokens. See Query route-optimized serving endpoints for details.

What is route optimization?

When you enable route optimization on an endpoint, Databricks Model Serving improves the network path for inference requests, resulting in faster, more direct communication between your client and the model. This optimized routing unlocks higher queries per second (QPS) compared to non-optimized endpoints and provides more stable and lower latencies for your applications.

Route optimization is one of several strategies for optimizing production workloads. For a comprehensive guide to optimization techniques, see Optimize Model Serving endpoints for production.

Requirements

- Route optimization on model serving endpoints has the same requirements as non-route-optimized model serving endpoints.

- Route optimization on feature serving endpoints has the same requirements as non-route-optimized feature serving endpoints.

Enable route optimization on a model serving endpoint

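You can enable route optimization using any of the following methods, each described in turn below:

- Serving UI
- REST API
- Python
- Databricks SDK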

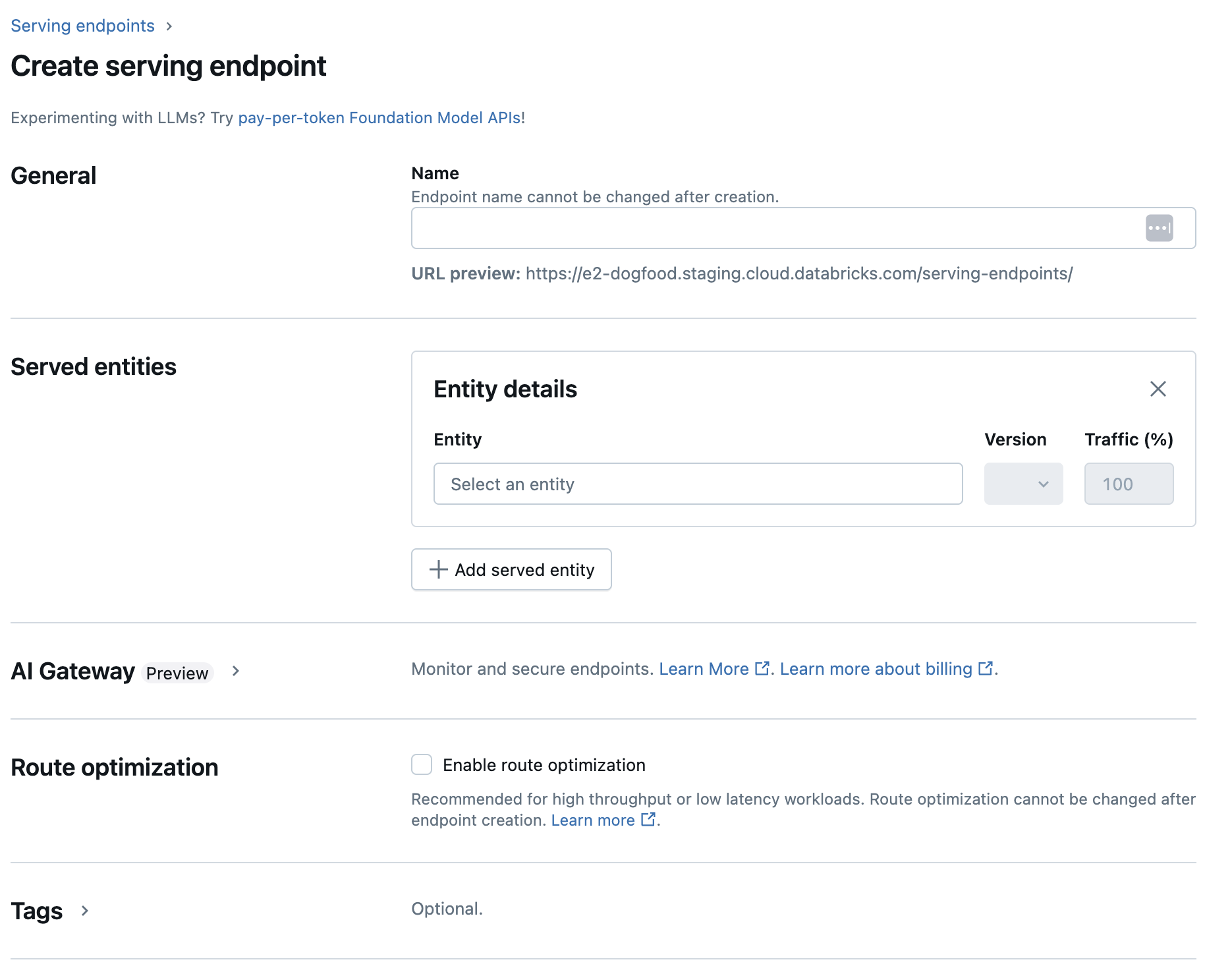

You can enable route optimization when you create a model serving endpoint using the Serving UI. You can only enable route optimization during endpoint creation; you cannot update existing endpoints to be route optimized.

- In the sidebar, click Serving to display the Serving UI.

- Click Create serving endpoint.

- In the Route optimization section, select Enable route optimization.

- After your endpoint is created, Databricks sends you a notification describing what you need to query a route-optimized endpoint.

To configure your serving endpoint for route optimization using the REST API, specify the route_optimized parameter during model serving endpoint creation. You can only specify this parameter during endpoint creation; you cannot update existing endpoints to be route optimized.

    POST /api/2.0/serving-endpoints
    {
      "name": "my-endpoint",
      "config": {
        "served_entities": [
          {
            "entity_name": "ads1",
            "entity_version": "1",
            "workload_type": "CPU",
            "workload_size": "Small",
            "scale_to_zero_enabled": true
          }
        ]
      },
      "route_optimized": true
    }
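
The request above could be issued from Python with only the standard library, roughly as sketched here. This is a minimal illustration, not an official client: the helper names build_create_request and create_endpoint are hypothetical, the host and token values are placeholders you would supply yourself, and the use of a bearer token in the Authorization header is an assumption about how your workspace authenticates REST calls.

```python
import json
import urllib.request

# Same body as the REST example; route_optimized must be set at creation time.
payload = {
    "name": "my-endpoint",
    "config": {
        "served_entities": [
            {
                "entity_name": "ads1",
                "entity_version": "1",
                "workload_type": "CPU",
                "workload_size": "Small",
                "scale_to_zero_enabled": True,
            }
        ]
    },
    "route_optimized": True,
}


def build_create_request(host: str, token: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) the POST request that creates a serving endpoint.

    host and token are placeholders; bearer-token auth is an assumption.
    """
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/serving-endpoints",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def create_endpoint(host: str, token: str, body: dict) -> dict:
    """Send the creation request and return the decoded JSON response."""
    request = build_create_request(host, token, body)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Separating request construction from sending keeps the payload easy to inspect before anything reaches the workspace. To create the endpoint, you would call create_endpoint with your workspace URL, a valid token, and payload.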

If you use Python, you can use the following notebook to create a route-optimized serving endpoint.

Create a route-optimized serving endpoint using a Python notebook

To configure your serving endpoint for route optimization using the Databricks SDK, specify the route_optimized parameter during model serving endpoint creation. You can only specify this parameter during endpoint creation; you cannot update existing endpoints to be route optimized.

    from databricks.sdk import WorkspaceClient
    from databricks.sdk.service.serving import EndpointCoreConfigInput, ServedEntityInput

    # Uses the default credentials configured in your environment.
    workspace = WorkspaceClient()

    workspace.serving_endpoints.create(
        name="my-serving-endpoint",
        config=EndpointCoreConfigInput(
            served_entities=[
                ServedEntityInput(
                    entity_name="main.default.my-served-entity",
                    scale_to_zero_enabled=True,
                    workload_size="Small",
                )
            ]
        ),
        # Can only be set at creation time; existing endpoints cannot be updated.
        route_optimized=True,
    )
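
Because route optimization cannot be turned on for an existing endpoint, it can help to identify which endpoints would have to be recreated. The sketch below assumes that endpoint descriptions are already available as dictionaries with a name key and an optional boolean route_optimized key; that response field is an assumption here, endpoints_to_recreate is a hypothetical helper, and the sample endpoint names are made up.

```python
from typing import Dict, List


def endpoints_to_recreate(endpoints: List[Dict]) -> List[str]:
    """Return the names of endpoints that are not route optimized.

    Assumes each dict has a "name" key and an optional boolean
    "route_optimized" key, treated as False when absent.
    """
    return [
        endpoint["name"]
        for endpoint in endpoints
        if not endpoint.get("route_optimized", False)
    ]


# Illustrative data only; these endpoint names are invented.
sample = [
    {"name": "legacy-endpoint"},
    {"name": "fast-endpoint", "route_optimized": True},
    {"name": "other-endpoint", "route_optimized": False},
]

print(endpoints_to_recreate(sample))  # ['legacy-endpoint', 'other-endpoint']
```

Each endpoint this returns would need to be created again with route_optimized set to true, because the setting cannot be changed afterward.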

Enable route optimization on a feature serving endpoint

To use route optimization for Feature and Function Serving, specify the full name of the feature specification in the entity_name field of the serving endpoint creation request. The entity_version field is not needed for FeatureSpecs.

    POST /api/2.0/serving-endpoints
    {
      "name": "my-endpoint",
      "config": {
        "served_entities": [
          {
            "entity_name": "catalog_name.schema_name.feature_spec_name",
            "workload_type": "CPU",
            "workload_size": "Small",
            "scale_to_zero_enabled": true
          }
        ]
      },
      "route_optimized": true
    }
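
The difference between the model serving and feature serving request bodies comes down to whether entity_version is present. As a rough sketch, a small payload builder can make that explicit. The function names build_served_entity and build_endpoint_payload are hypothetical, and the default workload values simply mirror the examples in this article.

```python
import json
from typing import Optional


def build_served_entity(
    entity_name: str,
    entity_version: Optional[str] = None,
    workload_type: str = "CPU",
    workload_size: str = "Small",
    scale_to_zero_enabled: bool = True,
) -> dict:
    """Build one served entity; entity_version is omitted when not given."""
    entity = {
        "entity_name": entity_name,
        "workload_type": workload_type,
        "workload_size": workload_size,
        "scale_to_zero_enabled": scale_to_zero_enabled,
    }
    if entity_version is not None:
        # Only models carry a version; FeatureSpecs do not need one.
        entity["entity_version"] = entity_version
    return entity


def build_endpoint_payload(name: str, served_entity: dict) -> dict:
    """Wrap a served entity in a creation payload with route optimization on."""
    return {
        "name": name,
        "config": {"served_entities": [served_entity]},
        "route_optimized": True,
    }


# Feature serving: full feature specification name, no entity_version.
feature_payload = build_endpoint_payload(
    "my-endpoint",
    build_served_entity("catalog_name.schema_name.feature_spec_name"),
)

# Model serving: same shape, plus entity_version.
model_payload = build_endpoint_payload(
    "my-endpoint",
    build_served_entity("ads1", entity_version="1"),
)

print(json.dumps(feature_payload, indent=2))
```

The printed body matches the feature serving request shown above and can be sent to POST /api/2.0/serving-endpoints with whichever HTTP client you already use.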

Limitations

- Route optimization is only available for custom model serving endpoints and feature serving endpoints. Serving endpoints that use Foundation Model APIs or external models are not supported.

- Databricks in-house OAuth tokens are the only supported authentication for route optimization. Personal access tokens are not supported.