Databricks Light

This documentation has been retired and might not be updated. The products, services, or technologies mentioned in this content are no longer supported. See "Databricks Light 2.4 Extended Support (EoS)".

Databricks Light is the Databricks packaging of the open source Apache Spark runtime. It provides a runtime option for jobs that do not need the advanced performance, reliability, or autoscaling benefits provided by Databricks Runtime. In particular, Databricks Light does not support:

- Delta Lake

- Autopilot features such as autoscaling

- Highly concurrent, all-purpose clusters

- Notebooks, dashboards, and collaboration features

- Connectors to various data sources and BI tools

Databricks Light is a runtime environment for jobs (or "automated workloads"). Jobs that run on Databricks Light clusters are billed at the lower Jobs Light Compute price. You can select Databricks Light only when you create or schedule a JAR, Python, or spark-submit job and attach a cluster to that job. You cannot use Databricks Light to run notebook jobs or interactive workloads.

You can use Databricks Light in the same workspace as clusters running on other Databricks runtimes and pricing tiers. You do not need to request a separate workspace to get started.

Databricks Light features

The Databricks Light release schedule follows the Apache Spark release schedule, and each Databricks Light version is based on a specific Apache Spark version. For details, see the following release notes:

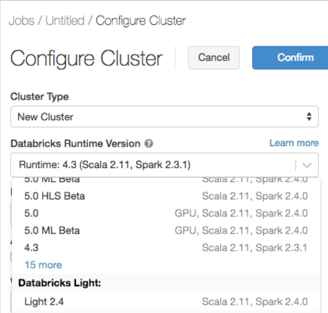

Create a cluster using Databricks Light

When you create a job cluster, select a Databricks Light version from the Databricks Runtime Version drop-down.
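The same choice can also be expressed in a job definition instead of the UI. The following is a minimal sketch, not an official recipe: it builds a request body in the shape used by the Databricks Jobs API 2.0 `jobs/create` endpoint and enforces the restriction described above that Databricks Light runs only JAR, Python, or spark-submit jobs. The runtime key `apache-spark-2.4.x-scala2.11` is an assumption for Databricks Light 2.4 and should be checked against the versions your workspace lists; the helper `build_light_job`, the job name, the main class, and the node type `i3.xlarge` are hypothetical placeholders.

```python
import json

# Assumption: runtime key for Databricks Light 2.4. Verify it against the
# runtime versions available in your workspace before using it.
LIGHT_SPARK_VERSION = "apache-spark-2.4.x-scala2.11"

# Databricks Light supports only JAR, Python, and spark-submit jobs,
# so notebook_task is deliberately absent from this set.
ALLOWED_TASKS = {"spark_jar_task", "spark_python_task", "spark_submit_task"}


def build_light_job(name, task_type, task_settings, node_type_id, num_workers):
    """Hypothetical helper: build a jobs/create payload for a Databricks Light job cluster."""
    if task_type not in ALLOWED_TASKS:
        raise ValueError(f"Databricks Light cannot run {task_type!r}")
    return {
        "name": name,
        # A new job cluster is created for each run; Light cannot back
        # interactive (all-purpose) clusters.
        "new_cluster": {
            "spark_version": LIGHT_SPARK_VERSION,
            "node_type_id": node_type_id,  # placeholder node type
            "num_workers": num_workers,    # fixed size: no autoscaling on Light
        },
        task_type: task_settings,
        # A real JAR job would also list its JAR under "libraries"; omitted here.
    }


payload = build_light_job(
    name="nightly-etl",
    task_type="spark_jar_task",
    task_settings={"main_class_name": "com.example.Main"},
    node_type_id="i3.xlarge",
    num_workers=2,
)
print(json.dumps(payload, indent=2))
```

Requesting a notebook job (for example `task_type="notebook_task"`) raises `ValueError`, mirroring the rule that Databricks Light cannot run notebook jobs.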

Preview

Support for Databricks Light on pool-backed job clusters is in Public Preview.