December 2020

These features and Databricks platform improvements were released in December 2020.

Releases are staged. Your Databricks account may not be updated until a week or more after the initial release date.

Databricks Runtime 7.5 GA

December 16, 2020

Databricks Runtime 7.5, Databricks Runtime 7.5 ML, and Databricks Runtime 7.5 for Genomics are now generally available.

For information, see the full release notes at Databricks Runtime 7.5 (EoS) and Databricks Runtime 7.5 for ML (EoS).

Existing Databricks accounts migrate to E2 platform today

December 16, 2020

Today we begin the migration of existing Databricks accounts to the E2 version of the platform, bringing new security features and the ability to create and manage multiple workspaces with ease. This first phase includes all invoiced accounts. Free Trial and “pay-as-you-go” accounts that are billed monthly by credit card will be migrated at a later date. Databricks is sending notifications to owners of all accounts being migrated. This migration makes no change to accounts that already have workspaces on the E2 platform.

Accounts that migrate today will use the new account console to create and manage workspaces and view usage, and can use the Account API to create and manage workspaces. Your existing workspaces will not be converted to the E2 platform, but any new workspace you create will be on E2.

For more information about managing accounts on E2, see Manage your Databricks account.

Jobs API now supports updating existing jobs

December 14, 2020

You can now use the Jobs API Update endpoint to update a subset of fields for a job. The Update endpoint supplements the existing Reset endpoint by letting you specify only the fields that should be added, changed, or deleted, rather than overwriting all job settings. Using the Update endpoint is faster, easier, and safer when only some job settings need to change, particularly for bulk updates.
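As a rough illustration of a partial update, the sketch below builds a request body that changes two settings and removes one, leaving everything else on the job untouched. It assumes the Update endpoint accepts a `job_id`, a `new_settings` object, and a `fields_to_remove` list; the job ID and the setting values are hypothetical, and the sketch only constructs the JSON rather than sending it.

```python
import json


def build_job_update(job_id, new_settings=None, fields_to_remove=None):
    """Build the JSON body for a partial job update (a sketch, not an official client).

    Only the keys listed here are meant to be touched; settings that are
    not mentioned stay as they are, unlike a full Reset.
    """
    body = {"job_id": job_id}
    if new_settings:
        body["new_settings"] = dict(new_settings)
    if fields_to_remove:
        body["fields_to_remove"] = list(fields_to_remove)
    return body


# Hypothetical job ID and settings, for illustration only.
payload = build_job_update(
    job_id=123,
    new_settings={"max_concurrent_runs": 2, "timeout_seconds": 3600},
    fields_to_remove=["email_notifications"],
)
print(json.dumps(payload, indent=2))
```

For a bulk change, the same helper could be called once per job ID with an identical `new_settings` object, which avoids having to fetch and resend each job's complete settings.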

New global init script framework is GA

December 14, 2020

The new global init script framework, which was released as a Public Preview in July, is now generally available. The new framework brings significant improvements over legacy global init scripts:

- Init scripts are more secure, requiring admin permissions to create, view, and delete.

- Script-related launch failures are logged.

- You can set the execution order of multiple init scripts.

- Init scripts can reference cluster-related environment variables.

- Init scripts can be created and managed using the admin settings page or the new Global Init Scripts REST API.

Databricks recommends that you migrate existing legacy global init scripts to the new framework to take advantage of these improvements.

For details, see Global init scripts.
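The following sketch shows what a request body for creating a global init script through the REST API might look like. It assumes the API takes a `name`, a Base64-encoded `script`, a numeric `position` that controls execution order, and an `enabled` flag; the script name and contents are hypothetical, and `DB_CLUSTER_ID` is used as an example of a cluster-related environment variable. Nothing is sent over the network.

```python
import base64
import json


def build_global_init_script(name, script_text, position=0, enabled=True):
    """Build a create-request body for a global init script (illustrative sketch)."""
    # The script body is assumed to be sent Base64-encoded.
    encoded = base64.b64encode(script_text.encode("utf-8")).decode("ascii")
    return {
        "name": name,
        "script": encoded,
        "position": position,  # lower positions are assumed to run first
        "enabled": enabled,
    }


# Hypothetical script that records the cluster ID from an environment variable.
script_text = '#!/bin/bash\necho "cluster: $DB_CLUSTER_ID" >> /tmp/init.log\n'
request_body = build_global_init_script("log-cluster-id", script_text, position=0)
print(json.dumps(request_body, indent=2))
```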

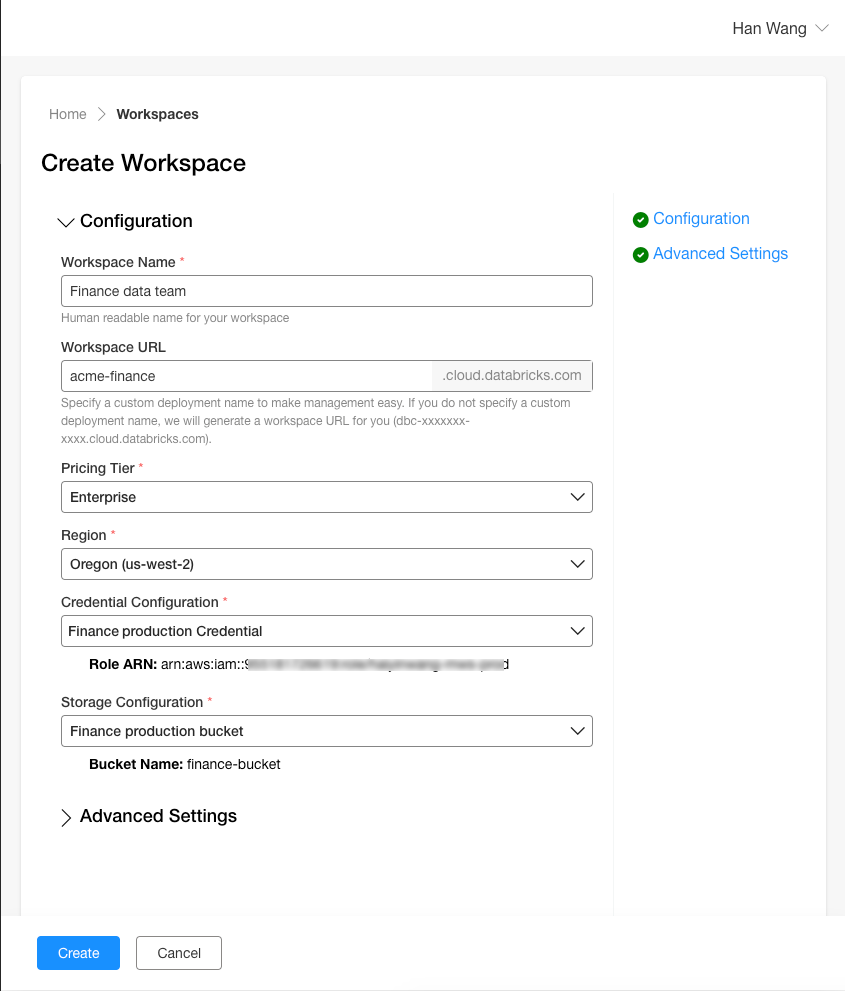

New account console enables customers on the E2 platform to create and manage multiple workspaces (Public Preview)

December 8, 2020

We are excited to announce the Public Preview of the new account console for accounts on the E2 version of the Databricks platform. The new account console gives you visibility across all of your workspaces in a single pane of glass, enabling you to:

- Create and manage the lifecycle of multiple workspaces.

- View your organization's spend on Databricks across all workspaces.

The new account console is available for customers who are on the next generation of Databricks' Enterprise Cloud Platform (E2). In the coming weeks we will also upgrade existing non-E2 Databricks accounts to enable you to take advantage of multiple workspaces for your teams and the account console to manage them. Stay tuned for more information about that migration.

For details, see Manage your Databricks account.

Databricks Runtime 7.5 (Beta)

December 3, 2020

Databricks Runtime 7.5, Databricks Runtime 7.5 ML, and Databricks Runtime 7.5 for Genomics are now available as Beta releases.

For information, see the full release notes at Databricks Runtime 7.5 (EoS) and Databricks Runtime 7.5 for ML (EoS).

Auto-AZ: automatic selection of availability zone (AZ) when you launch clusters

December 2-8, 2020: Version 3.34

For workspaces on the E2 version of the Databricks platform, you can now enable automatic selection of availability zone (AZ) when your users launch clusters (including job clusters). After you enable Auto-AZ using the Clusters API, Databricks chooses an AZ automatically based on the IPs available in the workspace subnets, and retries in other AZs if AWS returns insufficient capacity errors.

See Availability zones.
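As a minimal sketch of enabling Auto-AZ on a cluster specification, the helper below sets the zone to automatic before the spec is submitted to the Clusters API. It assumes that Auto-AZ is requested by setting `aws_attributes.zone_id` to `"auto"`; the cluster name, node type, and other values in the example spec are hypothetical.

```python
import copy


def with_auto_az(cluster_spec):
    """Return a copy of a cluster spec with automatic AZ selection requested."""
    spec = copy.deepcopy(cluster_spec)  # leave the caller's spec untouched
    # Assumption: "auto" is the value that asks Databricks to pick the AZ.
    spec.setdefault("aws_attributes", {})["zone_id"] = "auto"
    return spec


# Hypothetical cluster spec pinned to a specific zone.
base_spec = {
    "cluster_name": "example-cluster",
    "spark_version": "7.5.x-scala2.12",
    "node_type_id": "i3.xlarge",
    "num_workers": 2,
    "aws_attributes": {"zone_id": "us-west-2a"},
}
auto_spec = with_auto_az(base_spec)
print(auto_spec["aws_attributes"])
```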

Jobs API end_time field now uses epoch time

December 2-8, 2020: Version 3.34

The Runs Get and Runs List endpoints of the Jobs API now return a new end_time field. The field contains the time when the run ended, expressed as epoch time in milliseconds (milliseconds since 1/1/1970 UTC), for example 1605266150681.
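Because the value is in milliseconds rather than seconds, it needs to be scaled before it is treated as a timestamp. A short sketch of the conversion, using the example value above (the helper name is hypothetical):

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def end_time_to_datetime(end_time_ms):
    """Convert an epoch-milliseconds value to a timezone-aware UTC datetime."""
    # Integer milliseconds via timedelta avoids float rounding of the fraction.
    return EPOCH + timedelta(milliseconds=end_time_ms)


ended_at = end_time_to_datetime(1605266150681)
print(ended_at.isoformat())  # 2020-11-13T11:15:50.681000+00:00
```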

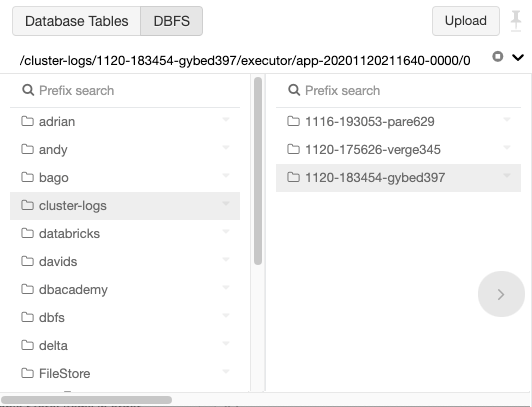

Find DBFS files using new visual browser

December 2-8, 2020: Version 3.34

You can now browse and search for DBFS objects in the Databricks workspace UI using the new DBFS file browser.

You can also upload files to DBFS using the new upload dialog in the browser.

This feature is disabled by default. An admin user must enable it using the Admin Console. See Manage the DBFS file browser.

Visibility controls for jobs, clusters, notebooks, and other workspace objects are now enabled by default on new workspaces

December 1-8, 2020: Version 3.34

Visibility controls, which were introduced in September, ensure that users can view only the notebooks, folders, clusters, and jobs that they have been given access to through workspace, cluster, or jobs access control. With this release, visibility controls are enabled by default for new workspaces. If your workspace was created before this release, an admin must enable them. See Access controls lists can no longer be disabled.

Improved display of nested runs in MLflow

December 2-8, 2020: Version 3.34

We improved the display of nested runs in the MLflow runs table. Child runs now appear grouped beneath the root run.

Admins can now lock user accounts (Public Preview)

December 2-8, 2020: Version 3.34

Administrators can now set a user's status to inactive. An inactive user can't access the workspace, but their permissions and associations with objects such as running clusters or access to notebooks remain unchanged. You can manually deactivate users via the SCIM API or automatically deactivate users after a set period of inactivity. See Users API.
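A manual deactivation through SCIM is typically expressed as a PATCH request that sets the user's `active` attribute to false. The sketch below builds such a request body using the standard SCIM 2.0 PatchOp message schema. The exact value shape that the Databricks Users API expects is an assumption here and should be checked against the Users API reference.

```python
import json

# Message schema URN defined by the SCIM 2.0 protocol for PATCH requests.
PATCH_OP_SCHEMA = "urn:ietf:params:scim:api:messages:2.0:PatchOp"


def build_set_active_patch(active):
    """Build a SCIM PatchOp body that sets a user's `active` attribute (sketch)."""
    return {
        "schemas": [PATCH_OP_SCHEMA],
        "Operations": [
            # Assumption: a plain boolean is accepted as the replacement value.
            {"op": "replace", "path": "active", "value": bool(active)}
        ],
    }


deactivate_body = build_set_active_patch(False)
print(json.dumps(deactivate_body, indent=2))
```

Reactivating the user would use the same body with the value set to true.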

Updated NVIDIA driver

December 2-8, 2020: Version 3.34

GPU-enabled clusters now use NVIDIA driver version 450.80.02.

Use your own keys to secure notebooks (Public Preview)

December 1, 2020

By default, notebooks and secrets are encrypted in the Databricks control plane using a key that is unique to the control plane but not to the workspace. Now you can specify your own encryption key to encrypt notebook and secret data in the control plane, a feature known as customer-managed keys for notebooks.

This feature is now in Public Preview. It requires that your account be on the E2 version of the Databricks platform or on a custom plan that has been enabled by Databricks for this feature.