May 2024

These features and Databricks platform improvements were released in May 2024.

Releases are staged. Your Databricks account might not be updated until a week or more after the initial release date.

Serverless firewall configuration now supports more compute types

May 31, 2024

Databricks now supports configuring firewalls for access from serverless jobs, notebooks, DLT pipelines, and model serving CPU endpoints using network connectivity configurations (NCCs). Account admins can create NCCs and attach them to workspaces to ensure secure and controlled access via stable IP addresses.

Databricks Runtime 15.0 series support ends

May 31, 2024

Support for Databricks Runtime 15.0 and Databricks Runtime 15.0 for Machine Learning ended on May 31. See Databricks support lifecycles.

Databricks Runtime 15.3 (Beta)

May 30, 2024

Databricks Runtime 15.3 and Databricks Runtime 15.3 ML are now available as Beta releases.

See Databricks Runtime 15.3 (EoS) and Databricks Runtime 15.3 for Machine Learning (EoS).

The compute metrics UI is now available on all Databricks Runtime versions

May 30, 2024

The compute metrics UI has been rolled out to all Databricks Runtime versions. Previously, these metrics were available only on compute resources running on Databricks Runtime 13.3 and above. See View compute metrics.

Improved search and filtering in notebook and SQL editor results tables

May 28, 2024

You can now easily search columns and select filter values from a dropdown list of existing values in the results tables within notebooks and the SQL editor.

New dashboard helps Databricks Marketplace providers monitor listing usage

May 28, 2024

The new Provider Analytics Dashboard enables Databricks Marketplace providers to monitor listing views, requests, and installs. The dashboard pulls data from the Marketplace system tables. See Monitor listing usage metrics using dashboards.

View system-generated federated queries in Query Profile

May 24, 2024

Lakehouse Federation now supports viewing system-generated federated queries and their metrics in the Query Profile. Click the federation scan node in the graph view to reveal the query pushed down into the data source. See View system-generated federated queries.

Compute plane outbound IP addresses must be added to a workspace IP allow list

May 24, 2024

If you configure IP access lists on your workspace, you must either add all public IPs that the compute plane uses to access the control plane to an allow list or configure back-end PrivateLink. This change will impact all new workspaces on July 29, 2024, and existing workspaces on August 26, 2024. For more information, see the Databricks Community post.

For example, when you configure a customer-managed VPC, subnets must have outbound access to the public network using a NAT gateway or a similar approach. Those public IPs must be present in an allow list. See Subnets. Alternatively, if you use a Databricks-managed VPC and you configure the managed NAT gateway to access public IPs, those IPs must be present in an allow list.

See Configure IP access lists for workspaces.
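As a rough illustration of the check described above, the following Python sketch compares a set of compute plane egress IPs against allow-list entries and reports any that are not covered. The function name and all addresses are hypothetical (the addresses come from reserved documentation ranges). This is a local sanity check only, not a call to any Databricks API.

```python
import ipaddress


def uncovered_ips(egress_ips, allow_list):
    """Return the egress IPs that no allow-list entry covers.

    egress_ips: iterable of IP address strings (for example, NAT gateway IPs).
    allow_list: iterable of IP addresses or CIDR ranges as strings.
    """
    # A bare address such as "192.0.2.44" is parsed as a single-host network.
    networks = [ipaddress.ip_network(entry, strict=False) for entry in allow_list]
    missing = []
    for ip in egress_ips:
        address = ipaddress.ip_address(ip)
        if not any(address in network for network in networks):
            missing.append(ip)
    return missing


# Hypothetical values for illustration only.
nat_gateway_ips = ["203.0.113.10", "203.0.113.11", "198.51.100.7"]
workspace_allow_list = ["203.0.113.0/28", "192.0.2.44"]

print(uncovered_ips(nat_gateway_ips, workspace_allow_list))
```

Any address the function returns would need to be added to an allow list before the change takes effect, assuming you do not configure back-end PrivateLink instead.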

OAuth is supported in Lakehouse Federation for Snowflake

May 24, 2024

Unity Catalog now allows you to create Snowflake connections using OAuth. See Run federated queries on Snowflake (OAuth).

Bulk move and delete workspace objects from the workspace browser

May 24, 2024

You can now select multiple items in the workspace to move or delete. When multiple objects are selected, an action bar appears and has options to move or delete items. Additionally, you can select multiple items using your mouse and drag them to a new location. Existing permissions on objects still apply during bulk move and delete operations.

Unity Catalog objects are available in recents and favorites

May 23, 2024

You can now find Unity Catalog objects like catalogs and schemas in your recents list. You can also favorite Unity Catalog objects in Catalog Explorer and the schema browser and find favorited objects on the workspace homepage.

New dbt-databricks connector 1.8.0 adopts decoupled dbt architecture

May 23, 2024

The dbt-databricks connector 1.8.0 is the first version to adopt the new decoupled dbt architecture. Previously, the connector depended on dbt-core directly so that customers did not have to specify versions for both libraries. The connector now depends on a shared abstraction layer between the adapter and dbt-core. As a result, the connector no longer needs to match the Databricks feature version to that of dbt-core, and is free to adopt semantic versioning. This means that connector developers no longer need to release significant features like compute-per-model as patches.

This release also brings the following:

- Improvements to the declaration and operation of materialized views and streaming tables, including the ability to schedule automatic refreshes.

- Support for Unity Catalog securable object tags. To distinguish them from dbt tags, which are metadata that is often used for selecting models in a dbt operation, these tags are named `databricks_tags` in the model configuration.

- Several improvements to metadata processing performance.

New compliance and security settings APIs (Public Preview)

May 23, 2024

Databricks has introduced new APIs for the compliance security profile, enhanced security monitoring, and automatic cluster update settings in workspaces. See:

Databricks Runtime 15.2 is GA

May 22, 2024

Databricks Runtime 15.2 and Databricks Runtime 15.2 ML are now generally available.

See Databricks Runtime 15.2 (EoS) and Databricks Runtime 15.2 for Machine Learning (EoS).

New Tableau connector for Delta Sharing

May 22, 2024

The new Tableau Delta Sharing Connector simplifies Tableau Desktop access to data that was shared with you using the Delta Sharing open sharing protocol. See Tableau: Read shared data.

New deep learning recommendation model examples

May 22, 2024

Databricks has published two new examples illustrating modern deep learning recommendation models, including the two-tower model and Meta's DLRM. To learn more about deep learning recommendation models, see Train recommender models.

Bind storage credentials and external locations to specific workspaces (Public Preview)

May 22, 2024

You can now bind storage credentials and external locations to specific workspaces, preventing access to those objects from other workspaces. This feature is especially helpful if you use workspaces to isolate user data access, for example, if you have separate production and development workspaces or a dedicated workspace for handling sensitive data.

For more information, see Assign an external location to specific workspaces and Assign a storage credential to specific workspaces.

Git folders are GA

May 22, 2024

Git folders are now generally available. See Databricks Git folders. If you are a user of the former “Repos” feature, see What happened to Databricks Repos?.

Pre-trained models in Unity Catalog (Public Preview)

May 21, 2024

Databricks now includes a selection of high-quality, pre-trained generative AI models in Unity Catalog. These pre-trained models allow you to access state-of-the-art AI capabilities for your inference workflows, saving you the time and expense of building your own custom models. See Access generative AI and LLM models from Unity Catalog.

Mosaic AI Vector Search is GA

May 21, 2024

Mosaic AI Vector Search is now generally available. See Mosaic AI Vector Search.

The compliance security profile now supports AWS Graviton instance types

May 21, 2024

You can now use AWS Graviton instance types in workspaces that enable the compliance security profile or enhanced security monitoring. See Compliance security profile and Enhanced security monitoring.

Databricks Assistant autocomplete (Public Preview)

May 20, 2024

Databricks Assistant autocomplete provides AI-powered suggestions in real time as you type in notebooks, queries, and files. To enable it, go to Settings > Developer > Experimental Features and toggle Databricks Assistant autocomplete. For details, see AI-based autocomplete.

Meta Llama 3 support in Foundation Model Training

May 20, 2024

Foundation Model Training now supports Meta Llama 3. See Foundation Model Fine-tuning.

New changes to Git folder UI

May 17, 2024

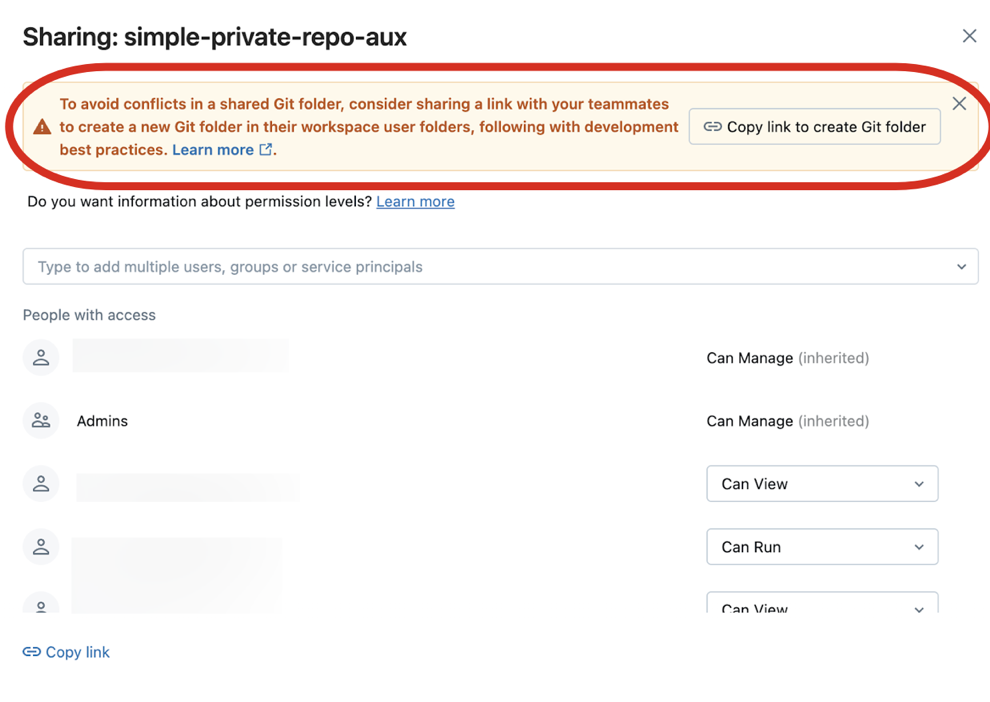

You may notice some changes to the user interface for Git folder interactions. We've added the following:

- When you share a Git folder, you will see a new alert in a banner that prompts you to Copy link to create Git folder. When you click the button, a URL is copied to your local clipboard, which you can send to another user. When that recipient user loads that URL in a browser, the user is taken to the workspace where they can create their own Git folder cloned from the same remote Git repository. When the recipient accesses the URL, they will see a Create Git folder dialog in the UI that is pre-populated with the values taken from your Git folder.

- Similarly, a new button, Create Git folder, appears on a new alert banner when you view a Git folder created by another user. Click this button to create your own Git folder for the same Git repository, based on the pre-populated values in the Create Git folder dialog.

Compute now uses EBS GP3 volumes for autoscaling local storage

May 15, 2024

Starting May 15, 2024, Databricks began updating new and existing compute to use Amazon EBS GP3 volumes for autoscaling local storage. This change may lead to compute launch failures for customers that have not increased GP3 EBS volume quota to match their quotas for older-generation ST1 EBS volumes.

To avoid compute launch failures, ensure that your AWS accounts have sufficient quota to use GP3 volumes. You can request quota increases from AWS if necessary.

Unified Login now supported with AWS PrivateLink (Private Preview)

May 15, 2024

Unified login allows you to manage one single sign-on (SSO) configuration in your account that is used for the account and Databricks workspace. Unified login is now supported with private connectivity using AWS PrivateLink between users and their Databricks workspaces. See Enable unified login and Configure classic private connectivity to Databricks. You must contact your Databricks account team to request access to this preview.

Foundation Model Training (Public Preview)

May 13, 2024

Databricks now supports Foundation Model Training. With Foundation Model Training, you use your own data to customize a foundation model to optimize its performance for your specific application. By fine-tuning or continuing training of a foundation model, you can train your own model using significantly less data, time, and compute resources than training a model from scratch. The training data, checkpoints, and fine-tuned model all reside on the Databricks platform and are integrated with its governance and productivity tools.

For details, see Foundation Model Fine-tuning.

Allow users to copy data to the clipboard from results table

May 9, 2024

Admins can now enable or disable the ability for users to copy data to their clipboard from results tables. Previously, this feature was limited to notebooks. Now, this setting applies to the following interfaces:

- Notebooks

- Dashboards

- Genie Spaces

- Catalog Explorer

- File editor

- SQL editor

Attribute tag values for Unity Catalog objects can now be 1000 characters long (Public Preview)

May 8, 2024

Attribute tag values in Unity Catalog can now be up to 1000 characters long. The character limit for tag keys remains 255. See Apply tags to Unity Catalog securable objects.
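To make the two limits concrete, here is a minimal Python sketch that checks a set of tags against them before you apply the tags. The function name and sample tags are hypothetical, and the sketch assumes that "characters" can be approximated by Python string length. It does not call Unity Catalog.

```python
MAX_TAG_KEY_LENGTH = 255     # key limit stated in this release note
MAX_TAG_VALUE_LENGTH = 1000  # value limit stated in this release note


def tag_length_problems(tags):
    """Return a list of messages for tag keys or values that exceed the limits."""
    problems = []
    for key, value in tags.items():
        if len(key) > MAX_TAG_KEY_LENGTH:
            problems.append(
                f"key starting with {key[:20]!r} is {len(key)} characters "
                f"(limit {MAX_TAG_KEY_LENGTH})"
            )
        if len(value) > MAX_TAG_VALUE_LENGTH:
            problems.append(
                f"value for {key[:20]!r} is {len(value)} characters "
                f"(limit {MAX_TAG_VALUE_LENGTH})"
            )
    return problems


# Hypothetical tags for illustration only.
sample_tags = {
    "cost_center": "analytics",
    "description": "x" * 1001,  # one character over the value limit
}

for message in tag_length_problems(sample_tags):
    print(message)
```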

New Previews page

May 8, 2024

Enable and manage access to Databricks previews on the new Previews page. See Manage Databricks previews.

New capabilities for Mosaic AI Vector Search

May 8, 2024

New capabilities include the following:

- PrivateLink and IP access lists are now supported.

- Customer-managed keys (CMK) are now supported on endpoints created on or after May 8, 2024. Vector Search support for CMK is in Public Preview.

- Improved audit logs and cost attribution tracking. See Audit log reference.

- You can now save generated embeddings as a Delta table. See Create a vector search index.

Credential passthrough and Hive metastore table access controls are deprecated

May 7, 2024

Credential passthrough and Hive metastore table access controls are deprecated on Databricks Runtime 15.0 and support will be removed in an upcoming Databricks Runtime version.

Upgrade to Unity Catalog to simplify the security and governance of your data by providing a central place to administer and audit data access across multiple workspaces in your account. See What is Unity Catalog?.

Databricks JDBC driver 2.6.38

May 6, 2024

We have released version 2.6.38 of the Databricks JDBC driver (download). This release adds the following new features and enhancements:

- Native Parameterized Query support if the server uses `SPARK_CLI_SERVICE_PROTOCOL_V8`. The limit on the number of parameters in a query is `256` in the native query mode.

- Support for data ingestion using a Unity Catalog volume. See more about Unity Catalog volumes in Connect to cloud object storage using Unity Catalog. To use this, set `UseNativeQuery` to `1`.

- A `QueryProfile` interface added to `IHadoopStatement` allows applications to retrieve a query's query ID. The query ID can be used to fetch the query's metadata using Databricks REST APIs.

- Async operations for metadata Thrift calls if the server uses `SPARK_CLI_SERVICE_PROTOCOL_V9`. To use this feature, set the `EnableAsyncModeForMetadataOperation` property to `1`.

- JWT assertion support. The connector now supports JWT assertion OAuth using client credentials. To do this, set the `UseJWTAssertion` property to `1`.

This release also resolves the following issues:

- Jackson library updates. The connector now uses the following libraries for the Jackson JSON parser: jackson-annotations 2.16.0 (previously 2.15.2), jackson-core 2.16.0 (previously 2.15.2), and jackson-databind 2.16.0 (previously 2.15.2).

- The connector contains unshaded class files in META-INF directory.
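The connection properties named in this release are set as `name=value` pairs on the JDBC connection URL. The Python sketch below assembles such a URL, assuming the `jdbc:databricks://<host>:443;httpPath=<path>;<property>=<value>` format. The helper name, host, and HTTP path are hypothetical placeholders, and authentication properties are deliberately left out.

```python
def build_jdbc_url(host, http_path, extra_properties):
    """Assemble a Databricks JDBC URL with additional driver properties.

    extra_properties: mapping of driver property names to values, appended
    in insertion order as ";name=value" pairs.
    """
    base = f"jdbc:databricks://{host}:443;httpPath={http_path}"
    suffix = "".join(f";{name}={value}" for name, value in extra_properties.items())
    return base + suffix


# Hypothetical host and HTTP path; replace them with your own connection details.
url = build_jdbc_url(
    host="example.cloud.databricks.com",
    http_path="/sql/1.0/warehouses/abc123",
    extra_properties={
        "UseNativeQuery": 1,                       # needed for Unity Catalog volume ingestion
        "EnableAsyncModeForMetadataOperation": 1,  # async metadata Thrift calls
    },
)
print(url)
```

The resulting string would still need authentication settings before a Java application could pass it to the driver.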

Databricks Runtime 15.2 (Beta)

May 2, 2024

Databricks Runtime 15.2 and Databricks Runtime 15.2 ML are now available as Beta releases.

See Databricks Runtime 15.2 (EoS) and Databricks Runtime 15.2 for Machine Learning (EoS).

Notebooks now detect and auto-complete column names for Spark Connect DataFrames

May 1, 2024

Databricks notebooks now automatically detect and display the column names in Spark Connect DataFrames and allow you to use auto-complete to select columns.