August 2022

These features and Databricks platform improvements were released in August 2022.

Releases are staged. Your Databricks account may not be updated until a week or more after the initial release date.

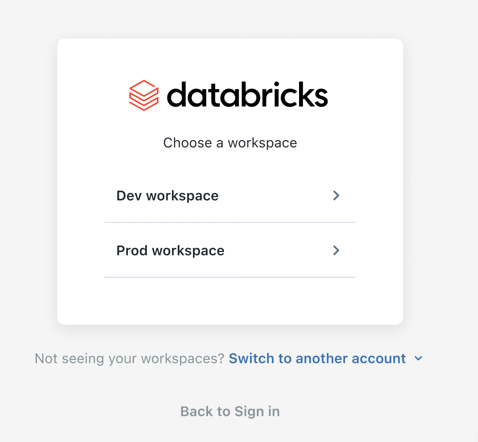

Account users can access the account console

August 1-31, 2022

Account users can access the Databricks account console to view a list of their workspaces. Account users can only view workspaces that they have been granted access to. See Manage your Databricks account.

All existing workspace users and service principals are synced automatically to your account as account-level users and service principals. See Sync identities from your identity provider.

Databricks ODBC driver 2.6.26

August 29, 2022

We have released version 2.6.26 of the Databricks ODBC driver (download). This release updates query support. You can now asynchronously cancel queries on HTTP connections upon API request.

This release also resolves the following issue:

- When using custom queries in Spotfire, the connector becomes unresponsive.

Databricks JDBC driver 2.6.29

August 29, 2022

We have released version 2.6.29 of the Databricks JDBC driver (download). This release resolves the following issues:

- When using an HTTP proxy with Cloud Fetch enabled, the connector does not return large data set results.

- Minor text issues in Databricks License Text. Documentation links were missing.

- The JAR file names were incorrect: SparkJDBC41.jar should have been DatabricksJDBC41.jar, and SparkJDBC42.jar should have been DatabricksJDBC42.jar.

Databricks Feature Store client now available on PyPI

August 26, 2022

The Feature Store client is now available on PyPI. The client requires Databricks Runtime 9.1 LTS or above, and can be installed using:

%pip install databricks-feature-store

The client is already packaged with Databricks Runtime for Machine Learning 9.1 LTS and above.

The client cannot be run outside of Databricks; however, you can install it locally to aid in unit testing and for additional IDE support (for example, autocompletion). For more information, see Databricks Feature Store Python client.
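Because the client cannot connect to anything outside Databricks, one way to unit test locally is to pass the client into your own functions and substitute a mock in tests. The following is a minimal sketch of that pattern, not an official recipe: `load_features` and the table name `ml.customer_features` are hypothetical, and the sketch assumes that `read_table(name=...)` matches the client version you have installed.

```python
from unittest.mock import MagicMock


def load_features(fs_client, table_name):
    """Read a feature table through a client passed in by the caller.

    fs_client is expected to behave like FeatureStoreClient. Passing it in
    (instead of constructing it here) lets a local test substitute a mock.
    """
    return fs_client.read_table(name=table_name)


# Local test double: no Databricks connection is made.
fake_client = MagicMock()
fake_client.read_table.return_value = [{"customer_id": 1, "total_spend": 42.0}]

# "ml.customer_features" is a hypothetical table name used for illustration.
rows = load_features(fake_client, "ml.customer_features")
fake_client.read_table.assert_called_once_with(name="ml.customer_features")
```

In a Databricks notebook, the same function would receive a real client instance instead of the mock.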

Unity Catalog is GA

August 25, 2022

Unity Catalog is generally available. For detailed feature announcements and limitations, see Unity Catalog GA release note.

Delta Sharing is GA

August 25, 2022

Delta Sharing is now generally available, beginning with Databricks Runtime 11.1. For details, see What is Delta Sharing?.

- Databricks-to-Databricks Delta Sharing is fully managed without the need for exchanging tokens.

- Create and manage providers, recipients, and shares with a simple-to-use UI.

- Create and manage providers, recipients, and shares with SQL and REST APIs with full CLI and Terraform support.

- Query changes to data, or share incremental versions with Change Data Feeds.

- Restrict recipient access to downloading credential files or querying data using IP access lists and region restrictions.

- Using Delta Sharing to share data within the same Databricks account is enabled by default.

- Enforce separation of duties by delegating management of Delta Sharing to non-admins.
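As a rough illustration of the SQL route listed above, the sketch below builds the statements a provider might run to share one table with one recipient. The share, table, and recipient names are hypothetical, and the statement forms should be checked against the Delta Sharing SQL reference before use.

```python
def sharing_statements(share, table, recipient):
    """Build SQL statements that share one table with one recipient."""
    return [
        f"CREATE SHARE IF NOT EXISTS {share}",
        f"ALTER SHARE {share} ADD TABLE {table}",
        f"CREATE RECIPIENT IF NOT EXISTS {recipient}",
        f"GRANT SELECT ON SHARE {share} TO RECIPIENT {recipient}",
    ]


# Hypothetical names, for illustration only.
statements = sharing_statements("sales_share", "main.sales.orders", "partner_co")
for stmt in statements:
    # In a Databricks notebook, each statement could be passed to spark.sql.
    print(stmt)
```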

Databricks Runtime 11.2 (Beta)

August 23, 2022

Databricks Runtime 11.2, 11.2 Photon, and 11.2 ML are now available as Beta releases.

See the full release notes at Databricks Runtime 11.2 (EoS) and Databricks Runtime 11.2 for Machine Learning (EoS).

Reduced message volume in the DLT UI for continuous pipelines

August 22-29, 2022: Version 3.79

With this release, the state transitions for live tables in a DLT continuous pipeline are displayed in the UI only until the tables enter the running state. Any transitions related to successful recomputation of the tables are not displayed in the UI, but are available in the DLT event log at the METRICS level. Any transitions to failure states are still displayed in the UI. Previously, all state transitions were displayed in the UI for live tables. This change reduces the volume of pipeline events displayed in the UI and makes it easier to find important messages for your pipelines. To learn more about querying the event log, see Pipeline event log.

Easier cluster configuration for your DLT pipelines

August 22-29, 2022: Version 3.79

You can now select a cluster mode, either autoscaling or fixed size, directly in the DLT UI when you create a pipeline. Previously, configuring an autoscaling cluster required changes to the pipeline's JSON settings. For more information on creating a pipeline and the new Cluster mode setting, see Run a pipeline update.
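For comparison, the JSON route that the UI setting replaces might look roughly like the sketch below, which builds a `clusters` entry for either mode. The helper `pipeline_cluster`, its mode labels, and the pipeline name are hypothetical; the field names (`label`, `num_workers`, `autoscale`, `min_workers`, `max_workers`) are assumptions about the pipeline settings format and should be verified against the pipeline settings reference.

```python
import json


def pipeline_cluster(mode, workers=None, min_workers=None, max_workers=None):
    """Return one 'clusters' entry for a pipeline's JSON settings.

    mode is "fixed" or "autoscaling"; these labels exist only in this sketch.
    """
    cluster = {"label": "default"}
    if mode == "fixed":
        if workers is None:
            raise ValueError("fixed size mode needs a worker count")
        cluster["num_workers"] = workers
    elif mode == "autoscaling":
        if min_workers is None or max_workers is None:
            raise ValueError("autoscaling mode needs min_workers and max_workers")
        if min_workers > max_workers:
            raise ValueError("min_workers cannot exceed max_workers")
        cluster["autoscale"] = {"min_workers": min_workers, "max_workers": max_workers}
    else:
        raise ValueError(f"unknown mode: {mode}")
    return cluster


settings = {
    "name": "example-pipeline",  # hypothetical pipeline name
    "clusters": [pipeline_cluster("autoscaling", min_workers=1, max_workers=5)],
}
print(json.dumps(settings, indent=2))
```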

Orchestrate dbt tasks in your Databricks workflows (Public Preview)

August 22-29, 2022: Version 3.79

You can run your dbt core project as a task in a Databricks job with the new dbt task, allowing you to include your dbt transformations in a data processing workflow. For example, your workflow can ingest data with Auto Loader, transform the data with dbt, and analyze the data with a notebook task. For more information about the dbt task, including an example, see Use dbt transformations in Lakeflow Jobs. For more information on creating, running, and scheduling a workflow that includes a dbt task, see Lakeflow Jobs.
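The ingest, transform, analyze workflow described above could be expressed as a job definition along these lines. This is a sketch under assumptions: the notebook paths, job name, and warehouse ID are hypothetical, cluster and Git source settings are omitted, and the `dbt_task` fields shown (`commands`, `warehouse_id`) should be checked against the Jobs API reference.

```python
def dbt_workflow(warehouse_id):
    """Build a three-task job definition: ingest, then dbt, then analysis."""
    return {
        "name": "ingest-transform-analyze",  # hypothetical job name
        "tasks": [
            {
                "task_key": "ingest",
                # Hypothetical notebook that loads data with Auto Loader.
                "notebook_task": {"notebook_path": "/Shared/ingest_with_auto_loader"},
            },
            {
                "task_key": "transform",
                "depends_on": [{"task_key": "ingest"}],
                "dbt_task": {
                    "commands": ["dbt deps", "dbt run"],
                    "warehouse_id": warehouse_id,
                },
            },
            {
                "task_key": "analyze",
                "depends_on": [{"task_key": "transform"}],
                # Hypothetical notebook that analyzes the transformed data.
                "notebook_task": {"notebook_path": "/Shared/analyze_results"},
            },
        ],
    }


def upstream(job, task_key):
    """Return the task keys that the given task depends on directly."""
    for task in job["tasks"]:
        if task["task_key"] == task_key:
            return [dep["task_key"] for dep in task.get("depends_on", [])]
    raise KeyError(task_key)


job = dbt_workflow("1234567890abcdef")  # hypothetical warehouse ID
```

The `depends_on` entries are what make the dbt task run after ingestion and before the analysis notebook.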

Users can be members of multiple Databricks accounts

August 25, 2022

A user can now be a member of more than one Databricks account. Previously, a user could be a member of only one Databricks account. A user can be an account owner of only one Databricks account. To learn more about the Databricks identity management model, see Manage users, service principals, and groups.

Identity federation is GA

August 25, 2022

Identity federation simplifies Databricks administration by enabling you to assign account-level users, service principals, and groups to identity-federated workspaces. You can now configure and manage all of your users, service principals, and groups once in the account console, rather than repeating configuration separately in each workspace. To learn more about identity federation, see Assign identities to workspaces. To get started, see Identity federation.

Partner Connect supports connecting to Stardog

August 24, 2022

You can now easily create a connection between Stardog and your Databricks workspace using Partner Connect. Stardog provides a knowledge graph platform to answer complex queries across data silos.

Databricks Feature Store integration with Serverless Real-Time Inference

August 22-29, 2022: Version 3.79

Databricks Feature Store now supports automatic feature lookup for Serverless Real-Time Inference. For details, see Model Serving with automatic feature lookup.

Additional data type support for Databricks Feature Store automatic feature lookup

August 22-29, 2022: Version 3.79

Databricks Feature Store now supports BooleanType for automatic feature lookup. See Model Serving with automatic feature lookup.

Bring your own key: Encrypt Git credentials

August 23-29, 2022

You can use an encryption key for Git credentials for Databricks Repos.

Cluster UI preview and access mode replaces security mode

August 19, 2022

The new Create Cluster UI is in Preview. See Compute configuration reference.

Unity Catalog limitations (Public Preview)

August 16, 2022

- Scala, R, and workloads using the Machine Learning Runtime are supported only on clusters using the single user access mode. Workloads in these languages do not support the use of dynamic views for row-level or column-level security.

- Shallow clones are not supported when using Unity Catalog as the source or target of the clone.

- Bucketing is not supported for Unity Catalog tables. Commands trying to create a bucketed table in Unity Catalog will throw an exception.

- Overwrite mode for DataFrame write operations into Unity Catalog is supported only for Delta tables, not for other file formats. The user must have the CREATE privilege on the parent schema and must be the owner of the existing object.

- Streaming currently has the following limitations:

  - It is not supported in clusters using shared access mode. For streaming workloads, you must use single user access mode.

  - Asynchronous checkpointing is not yet supported.

  - Streaming queries lasting more than 30 days on all-purpose or jobs clusters will throw an exception. For long-running streaming queries, configure automatic job retries.

- Referencing Unity Catalog tables from DLT pipelines is currently not supported.

- Groups previously created in a workspace cannot be used in Unity Catalog GRANT statements. This is to ensure a consistent view of groups that can span across workspaces. To use groups in GRANT statements, create your groups in the account console and update any automation for principal or group management (such as SCIM, Okta and Microsoft Entra ID connectors, and Terraform) to reference account endpoints instead of workspace endpoints.
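For the 30-day streaming limitation listed above, automatic retries are configured on the job task. The sketch below adds retry settings to a task definition. The helper `with_retries`, the task key, and the notebook path are hypothetical; the field names `max_retries` and `min_retry_interval_millis`, and the use of `-1` to mean unlimited retries, are assumptions to confirm against the Jobs API reference.

```python
def with_retries(task, max_retries=-1, min_retry_interval_ms=60_000):
    """Return a copy of a job task definition with retry settings added.

    max_retries=-1 is assumed here to mean "retry indefinitely".
    """
    updated = dict(task)
    updated["max_retries"] = max_retries
    updated["min_retry_interval_millis"] = min_retry_interval_ms
    return updated


streaming_task = {
    "task_key": "stream_orders",  # hypothetical task key
    "notebook_task": {"notebook_path": "/Shared/stream_orders"},  # hypothetical path
}
retrying_task = with_retries(streaming_task)
```

The original task dictionary is left unchanged, so the same base definition can be reused with different retry policies.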

Unity Catalog is available in the following regions:

- us-west-2

- us-east-1

- us-east-2

- ca-central-1

- eu-west-1

- eu-west-2

- eu-central-1

- ap-south-1

- ap-southeast-2

- ap-southeast-1

- ap-northeast-2

- ap-northeast-1

To use Unity Catalog in another region, contact your account team.

Serverless Real-Time Inference in Public Preview

August 16, 2022

Serverless Real-Time Inference processes your machine learning models using MLflow and exposes them as REST API endpoints. This functionality uses Serverless compute, which means that the endpoints and associated compute resources are managed and run in the Databricks cloud account. Usage and storage costs incurred are currently free of charge, but Databricks will provide notice when charging begins.

Workspace admins must enable Serverless Real-Time Inference in your workspace for you to use this feature.

To participate in the Serverless Real-Time Inference public preview, contact your Databricks account team.

Serverless SQL warehouses improvements

August 15, 2022

Improvements to Serverless SQL warehouses:

- Worker nodes are private, which means they do not have public IP addresses.

- Serverless SQL warehouses use a private network for communication between the Databricks control plane and the serverless compute plane, which are both in the Databricks AWS account.

- When reading or writing to Amazon S3 buckets in the same AWS region as your workspace, Serverless SQL warehouses now use direct access to S3 using AWS gateway endpoints. This applies when a Serverless SQL warehouse reads and writes to your workspace's root S3 bucket in your AWS account and to other S3 data sources in the same region.

Before you create Serverless SQL warehouses, an admin must enable them for the workspace. For more architectural information, see Serverless compute plane.

Share VPC endpoints among Databricks accounts

August 15, 2022

If you have multiple workspaces that share the same customer-managed VPC, you can optionally share AWS VPC endpoints and Databricks VPC endpoint registrations. You can now also share AWS VPC endpoints among multiple Databricks accounts, as long as the workspaces that use them share the same customer-managed VPC. You must register the AWS VPC endpoints in each Databricks account. See Configure classic private connectivity to Databricks.

AWS PrivateLink private access level ANY is deprecated

August 15, 2022

To improve the security of workspaces with the AWS PrivateLink feature enabled, Databricks has deprecated the ANY private access level, which performs no filtering of which VPC endpoints can connect to your workspace using PrivateLink. Historically, the private access level default was ANY. The new default is ACCOUNT, which limits connections to those VPC endpoints that are registered in your Databricks account. You can no longer create private access settings with the ANY private access level or update existing settings to change the level to ANY.

For workspaces that already use the ANY private access level:

- There is nothing you must do immediately.

- For better security, Databricks strongly encourages you to update each workspace's private access settings level to one of the other levels: ACCOUNT (all registered VPC endpoints in your account) or ENDPOINT (specific registered VPC endpoints). Make this change in the account console or using the Account API.

- If you use the account console to update the private access settings for any fields, you must change the private access level to a non-deprecated value. To make changes to other fields but not change the ANY private access level yet, instead use the Account API.
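When moving to a non-deprecated level through the Account API, the request body might be assembled as in the sketch below. The helper `private_access_settings`, the settings name, and the endpoint ID are hypothetical; the field names (`private_access_settings_name`, `region`, `private_access_level`, `allowed_vpc_endpoint_ids`) are assumptions to verify against the Account API reference.

```python
NON_DEPRECATED_LEVELS = {"ACCOUNT", "ENDPOINT"}


def private_access_settings(name, region, level="ACCOUNT", endpoint_ids=None):
    """Build a private access settings body that uses a non-deprecated level."""
    if level == "ANY":
        raise ValueError("the ANY private access level is deprecated")
    if level not in NON_DEPRECATED_LEVELS:
        raise ValueError(f"unknown private access level: {level}")
    body = {
        "private_access_settings_name": name,
        "region": region,
        "private_access_level": level,
    }
    if level == "ENDPOINT":
        # ENDPOINT restricts access to specific registered VPC endpoints.
        if not endpoint_ids:
            raise ValueError("ENDPOINT level needs at least one VPC endpoint ID")
        body["allowed_vpc_endpoint_ids"] = list(endpoint_ids)
    return body


# Hypothetical settings name and endpoint registration ID.
account_body = private_access_settings("prod-pas", "us-west-2")
endpoint_body = private_access_settings(
    "prod-pas", "us-west-2", level="ENDPOINT", endpoint_ids=["example-endpoint-id"]
)
```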

Improvements to AWS PrivateLink connectivity

August 9, 2022

There are several improvements to AWS PrivateLink connectivity:

- You no longer need to contact Databricks to enable PrivateLink on your account.

- You can use the account console to create or update a workspace with PrivateLink connectivity.

- AWS VPC endpoints to Databricks automatically and quickly transition to the Available state.

- For a new VPC endpoint, you can now enable private DNS during creation rather than in extra steps afterward. This simplifies enablement of PrivateLink in the AWS Console as well as using Terraform and other automation tools.

See Configure classic private connectivity to Databricks.

Improved workspace search is now GA

August 9, 2022

You can now search for notebooks, libraries, folders, files, and repos by name. You can also search for content within a notebook and see a preview of the matching content. Search results can be filtered by type. See Search for workspace objects.

Use generated columns when you create DLT datasets

August 8-15, 2022: Version 3.78

You can now use generated columns when you define tables in your DLT pipelines. Generated columns are supported by the DLT Python and SQL interfaces.

Improved editing for notebooks with Monaco-based editor (Experimental)

August 8-15, 2022

A new Monaco-based code editor is available for Python notebooks. To enable it, check the option Turn on the new notebook editor on the Editor settings tab on the User Settings page.

The new editor includes parameter type hints, object inspection on hover, code folding, multi-cursor support, column (box) selection, and side-by-side diffs in the notebook version history.

You can share feedback with the Give feedback link on the User Settings page.

Compliance controls FedRAMP Moderate, PCI-DSS, and HIPAA (GA)

August 4, 2022

The compliance controls for FedRAMP Moderate, PCI-DSS, and HIPAA are now generally available. They all require use of the compliance security profile.

Add security controls with the compliance security profile (GA)

August 4, 2022

The compliance security profile is now generally available. If a Databricks workspace enables the profile, the workspace has additional monitoring, enforced instance types for inter-node encryption, a hardened compute image, and other features.

The compliance security profile includes controls that help meet certain security requirements in some compliance standards. Enabling the compliance security profile is required to use Databricks to process data that is regulated under the following compliance standards: FedRAMP Moderate, PCI-DSS, and HIPAA.

However, you can enable the compliance security profile for its enhanced security features without the need to conform to any compliance standard.

Add image hardening and monitoring agents with enhanced security monitoring (GA)

August 4, 2022

Enhanced security monitoring provides an enhanced hardened disk image (a CIS-hardened Ubuntu Advantage AMI) and additional security monitoring agents that generate logs that you can review.

Databricks Runtime 10.3 series support ends

August 2, 2022

Support for Databricks Runtime 10.3 and Databricks Runtime 10.3 for Machine Learning ended on August 2. See Databricks support lifecycles.

DLT now supports refreshing only selected tables in pipeline updates

August 2-24, 2022

You can now start an update for only selected tables in a DLT pipeline. This feature accelerates testing of pipelines and resolution of errors by allowing you to start a pipeline update that refreshes only selected tables. To learn how to start an update of only selected tables, see Run a pipeline update.
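If you start updates through the REST API rather than the UI, a selective refresh might be requested with a body like the one built below. The helper `selected_update` and the table names are hypothetical; the field names `refresh_selection` and `full_refresh_selection` are assumptions about the start-update request and should be checked against the Pipelines API reference.

```python
def selected_update(refresh=(), full_refresh=()):
    """Build a start-update request body that refreshes only chosen tables."""
    body = {}
    if refresh:
        body["refresh_selection"] = list(refresh)
    if full_refresh:
        body["full_refresh_selection"] = list(full_refresh)
    if not body:
        raise ValueError("select at least one table to refresh")
    return body


# Hypothetical table names, for illustration only.
update_body = selected_update(refresh=["orders_cleaned"], full_refresh=["orders_daily"])
```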

Job execution now waits for cluster libraries to finish installing

August 1, 2022

When a cluster is starting, your Databricks jobs now wait for cluster libraries to complete installation before executing. Previously, job runs would wait for libraries to install on all-purpose clusters only if they were specified as a dependent library for the job. For more information on configuring dependent libraries for tasks, see Configure and edit tasks in Lakeflow Jobs.